Data Pipeline 2 | Airflow Architecture and Directed Acyclic Graph

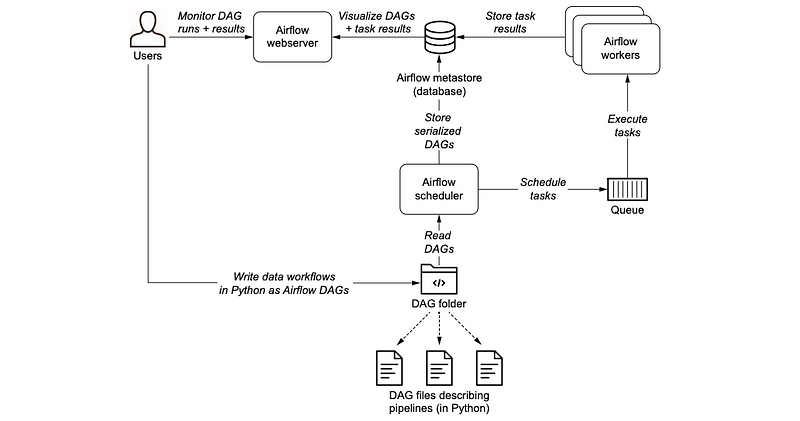

1. Airflow Architecture

(1) The Components of Airflow

- Scheduler: triggers scheduled workflows and submits their tasks to the executor to run

- Executor: runs the tasks. In a local Airflow installation the executor runs inside the scheduler, but in production the scheduler usually pushes tasks out to workers. Executors fall into two groups:

a. Local Executors: Debug Executor, Local Executor, Sequential Executor

b. Remote Executors: Celery Executor, CeleryKubernetes Executor, Dask Executor, Kubernetes Executor

- Webserver: the web UI we have seen previously, used to inspect, trigger, and debug the behavior of DAGs and tasks

- DAG Directory: Airflow reads DAG files from a directory, dags_folder, where we store our DAGs. We can also change this directory, as we have discussed.

- Metadata Database: a database used by the scheduler, executor, and webserver to store the DAG states.

(2) Three Types of Tasks

In the previous Hello World example, we used the BashOperator, which executes bash commands. Now it is time to look at more types of tasks.

a. Operators: these are predefined tasks that can be strung together quickly. Note that all operators inherit from the class BaseOperator.

- BashOperator: used to create bash tasks

- PythonOperator: used to create tasks that call Python functions

- BigQueryOperator: used to create BigQuery SQL query tasks

- EmailOperator: used to create email-sending tasks

- DummyOperator: an operator that does nothing; it is commonly used to group tasks

b. Sensors: a sensor is a special type of operator designed to wait for a specific event. All it does is wait until something happens and then succeed, so that its downstream tasks can run.

- HdfsSensor: used to wait for a file or a folder in HDFS
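The "wait, then succeed" behavior of a sensor can be pictured as a polling loop. The following is a toy sketch in plain Python, not Airflow's sensor implementation. The names `wait_for`, `poke_interval`, `timeout`, and `file_has_arrived` are hypothetical and chosen only for illustration.

```python
import time


def wait_for(condition, poke_interval=0.01, timeout=1.0):
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Returns True if the condition was met, False if we gave up.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poke_interval)


# Hypothetical event: the "file" shows up on the third check.
checks = {"count": 0}


def file_has_arrived():
    checks["count"] += 1
    return checks["count"] >= 3


arrived = wait_for(file_has_arrived)
print(arrived, checks["count"])  # True 3
```

Only once `wait_for` returns True would the downstream work start; a timeout corresponds to the sensor giving up instead of succeeding.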

c. TaskFlow: this is a new feature in Airflow 2.0 that lets us turn Python functions into tasks by decorating them with @task, without extra boilerplate. We will talk about it later.

(3) Tasks Control Flow

In Airflow, DAGs are designed to be reusable, and they can also run in parallel. To make this possible, the dependencies between tasks should be declared explicitly with the >> or << operators. For example, if task_2 depends on task_1, we will have,

task_1 >> task_2

or,

task_2 << task_1

We can also declare this dependency with the set_downstream or set_upstream methods. For example, if task_2 depends on task_1, we will have,

task_1.set_downstream(task_2)

or,

task_2.set_upstream(task_1)

These dependencies are what make up the “edges” of the DAGs that we will explain in a minute.
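To see why the four spellings above are interchangeable, here is a toy sketch of how `>>` and `<<` can be built on top of `set_downstream` and `set_upstream` using Python's operator overloading. The `Task` class below is a hypothetical stand-in written for this example; it is not Airflow's BaseOperator and only records edges.

```python
class Task:
    """Toy stand-in for a task; it only tracks its dependencies."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()
        self.downstream = set()

    def set_downstream(self, other):
        self.downstream.add(other.task_id)
        other.upstream.add(self.task_id)

    def set_upstream(self, other):
        other.set_downstream(self)

    def __rshift__(self, other):
        # task_1 >> task_2
        self.set_downstream(other)
        return other  # returning `other` allows chaining: a >> b >> c

    def __lshift__(self, other):
        # task_2 << task_1
        self.set_upstream(other)
        return other


def edges_for(style):
    """Declare 'task_2 depends on task_1' in one of the four styles."""
    task_1, task_2 = Task("task_1"), Task("task_2")
    if style == ">>":
        task_1 >> task_2
    elif style == "<<":
        task_2 << task_1
    elif style == "set_downstream":
        task_1.set_downstream(task_2)
    else:
        task_2.set_upstream(task_1)
    return task_1.downstream, task_2.upstream


results = [edges_for(s) for s in (">>", "<<", "set_downstream", "set_upstream")]
print(results[0])  # ({'task_2'}, {'task_1'})
```

All four entries in `results` are identical, which is the sense in which the operators and the methods declare the same edge.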

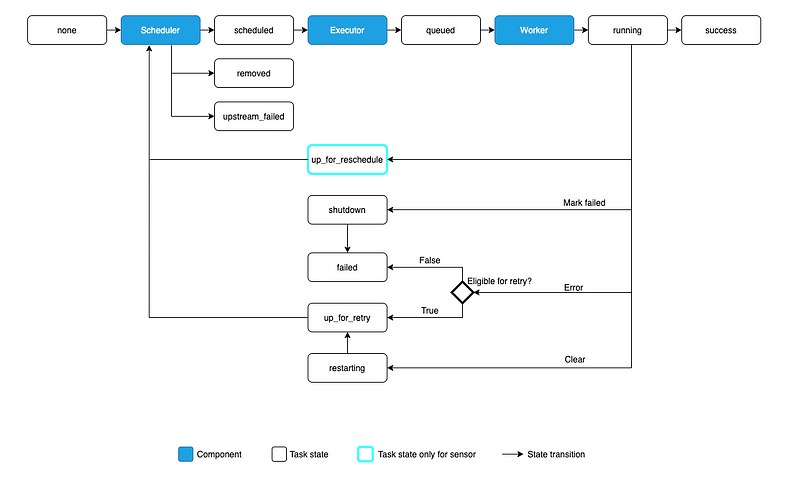

(4) Task Instance States

Each time a DAG runs, the tasks under this DAG are instantiated into task instances. A task instance has a state that represents which stage of its lifecycle it is in. The possible states for a task instance are,

- none: the task hasn’t been scheduled yet

- scheduled: the task has its dependencies met and should be able to run

- queued: the task has been allocated to an executor but is waiting for the next available worker

- running: the task is currently running on an executor

- success: the task finished running without errors

- failed: the task had an error during execution

- up_for_retry: the task failed, but has retry attempts left and will be rescheduled

- skipped: the task was skipped due to branching
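The difference between failed and up_for_retry is easiest to see in code. The sketch below lists the states above as an Enum and uses a hypothetical helper, `state_after_run`, to choose the state that follows one attempt. It assumes that a task configured with N retries may be attempted N + 1 times in total; the helper is an illustration, not Airflow's own logic.

```python
from enum import Enum


class State(Enum):
    NONE = "none"
    SCHEDULED = "scheduled"
    QUEUED = "queued"
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"
    UP_FOR_RETRY = "up_for_retry"
    SKIPPED = "skipped"


def state_after_run(succeeded, attempts_made, max_retries):
    """Pick the state that follows one attempt at running a task."""
    if succeeded:
        return State.SUCCESS
    # Assumption: max_retries=2 allows 3 attempts in total.
    if attempts_made <= max_retries:
        return State.UP_FOR_RETRY
    return State.FAILED


print(state_after_run(False, attempts_made=1, max_retries=2).value)  # up_for_retry
print(state_after_run(False, attempts_made=3, max_retries=2).value)  # failed
```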

2. Directed Acyclic Graph (DAG)

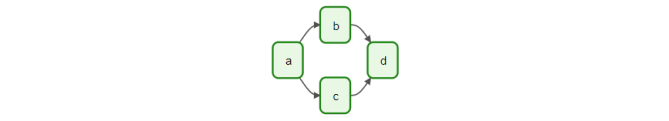

(1) The Definition of Directed Acyclic Graph

An acyclic graph is a graph that has no cycles, and a directed graph is a set of nodes connected by edges, where every edge points from one vertex to another. So a DAG is a graph that contains only directed edges and does not contain any loops.

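The tricky part is that the edges of a DAG can still form a closed shape, as long as following their directions never leads from a node back to itself. So the key point is that you should care not only about the shape formed by the edges, but also about their directions. For example, the edges A → B, B → C, and A → C form a triangle but not a loop, so the graph is a DAG, whereas A → B, B → C, and C → A form a loop, so the graph is not a DAG.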
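Whether a directed graph contains a loop can be checked mechanically. The following is a minimal sketch using a depth-first search; the function name `has_cycle` and the two example edge lists are made up for this illustration.

```python
def has_cycle(edges):
    """Return True if the directed graph given as (src, dst) pairs has a cycle."""
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)
        graph.setdefault(dst, [])

    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {node: WHITE for node in graph}

    def visit(node):
        color[node] = GRAY
        for nxt in graph[node]:
            if color[nxt] == GRAY:  # we walked back onto the current path
                return True
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    return any(color[n] == WHITE and visit(n) for n in graph)


# Same triangular shape, different edge directions.
triangle = [("A", "B"), ("B", "C"), ("A", "C")]
loop = [("A", "B"), ("B", "C"), ("C", "A")]

print(has_cycle(triangle))  # False: this graph is a DAG
print(has_cycle(loop))      # True: this graph is not a DAG
```

Both edge lists draw the same triangle when direction is ignored; only the second one lets you follow the arrows back to where you started.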

(2) Features of DAG

A DAG is a container that is used to organize tasks and set their execution context. DAGs do not perform any actual computation. Instead, Operators determine what actually gets done. The DAG itself doesn’t care about what is happening inside the tasks; it is merely concerned with how to execute them — the order to run them in, how many times to retry them, if they have timeouts, and so on.
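The split between "what gets done" and "in which order" can be sketched in plain Python. In the example below, hypothetical callables play the role of operators, while a dictionary of dependencies plays the role of the DAG. The standard-library `graphlib.TopologicalSorter` (Python 3.9+) derives a valid run order. This is only an illustration of the idea, not how Airflow's scheduler is implemented, and the task names are made up.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical task callables: these do the actual work.
log = []
tasks = {
    "extract": lambda: log.append("extract"),
    "transform": lambda: log.append("transform"),
    "load": lambda: log.append("load"),
}

# The "DAG" only records which tasks each task depends on.
depends_on = {
    "transform": {"extract"},
    "load": {"transform"},
}

# Run every task after all of the tasks it depends on.
for task_id in TopologicalSorter(depends_on).static_order():
    tasks[task_id]()

print(log)  # ['extract', 'transform', 'load']
```

Note that `depends_on` never looks inside the callables; swapping a task's body changes the work but not the order.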

(3) DAG Creation

Commonly, there are three ways to create a DAG. First, we can use a context manager with the with ... as ... structure,

with DAG("my_dag_name") as dag:
    dummy = DummyOperator(task_id="test_task")

Or we can use a standard constructor like,

my_dag = DAG("my_dag_name")
op = DummyOperator(task_id="task", dag=my_dag)

We can also use the @dag decorator, but we will not display it here.