High-Performance Computer Architecture 3 | Introduction to the Computer Architecture, Moore’s Law, Power, and Fabrication Cost

1. Basic Definitions

(1) The Definition of Architecture

Architecture is the design of a building that is well suited to its purpose.

(2) The Definition of Computer Architecture

Computer architecture is the design of a computer that is well suited to its purpose.

(3) Goals of Computer Architecture

- Improve performance: speed, battery lifetime, size, weight, energy efficiency.

- Improve abilities: 3D Graphics, debugging support, security

(4) Computer Architecture And Technology Trends

If we design a computer around the technology available today, it will be obsolete by the time it is built. To design future computers, we have to anticipate future technology, which means predicting technology trends and what will be available when the design ships.

2. Moore’s Law and Power

(1) Moore’s Law

Every 18–24 months, the number of transistors that fit on the same chip area doubles. For computer architects, this translates into the expectation that processor speed doubles every 18 to 24 months, that the energy per operation is halved every 18 to 24 months, and that memory capacity doubles every 18 to 24 months.

(6) Memory Wall

One of the consequences of Moore’s law is called the memory wall, and here’s why we have it. Processor speed (in terms of instructions per second) can be expected to roughly double about every 2 years. Memory capacity (in terms of gigabytes in the same size module) is also doubling every 2 years. Memory latency, the time it takes to complete a memory operation, has only been improving by about 1.1 times every 2 years. As a result, there is a growing gap between processor speed and memory speed, and this gap is often called the memory wall.

Because this gap has grown so much over the years, we use caches as a sort of staircase over the memory wall. Processors mostly access caches (which are fast), and only the rare accesses that miss in the caches go to main memory (which is slow).
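To get a feel for how quickly the gap widens, here is a minimal sketch that compounds the two rates quoted above (2x per 2 years for processor speed, 1.1x per 2 years for memory latency). The function names `growth` and `memory_gap` are made up for this illustration, and the rates are the rough figures from the text, not measurements.

```python
def growth(factor_per_period: float, years: float, period_years: float = 2.0) -> float:
    """Cumulative improvement after `years`, given a factor per `period_years`."""
    return factor_per_period ** (years / period_years)


def memory_gap(years: float) -> float:
    """How much faster the processor has improved than memory latency."""
    # Assumed rates from the text: CPU speed 2x, memory latency 1.1x per 2 years.
    return growth(2.0, years) / growth(1.1, years)


if __name__ == "__main__":
    for years in (2, 10, 20):
        print(
            f"{years:2d} years: processor x{growth(2.0, years):.1f}, "
            f"latency x{growth(1.1, years):.2f}, gap x{memory_gap(years):.1f}"
        )
```

Even with these rough numbers, the gap compounds: after a decade the processor has improved by a factor of 32 while latency has improved by well under a factor of 2.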

(2) The Definition of Processor Performance

When we talk about the performance of a processor, we are basically talking about,

- SPEED

- FABRICATION COST

Lower fabrication cost allows us to put processors into devices that have to be sold cheaply.

- POWER CONSUMPTION

Low power consumption is important not only because of the cost of electricity but also because it translates into longer battery life and smaller form factors, as in cell phones.

What we really want is for the speed of the processor to double every two years while the cost and power consumption stay about the same as before. But this always depends on technology improvement. Alternatively, we can settle for a small improvement in speed (e.g., 1.1 times) at about half the cost and half the power consumption of the original processor.

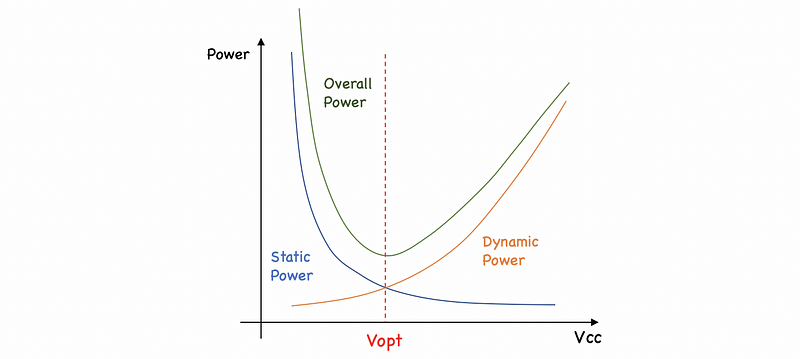

(3) More About the Power Consumption

There are really two kinds of power that a processor consumes,

- Dynamic Power (aka. active power): The power consumed by the actual activity in an electronic circuit.

- Static Power: Consumed when the circuit is powered on but idle.

(4) Dynamic Power (Active Power) and Moore’s Law

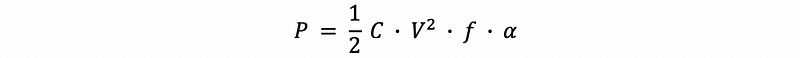

The active power can be computed with the following expression,

$$P = \frac{1}{2} \, C \, V^2 \, f \, \alpha$$

where,

- C is the total capacitance of the circuit (roughly proportional to chip area), so larger chips have more capacitance

- V is the power supply voltage. The higher the power supply voltage, the higher the power, and this relationship is quadratic (hence V²)

- f is the clock frequency, the gigahertz (GHz) figure seen on a processor specification

- α is the activity factor. Without α, we would be assuming that every transistor in the processor switches on every clock cycle. For example, α = 10% means that only 10% of all the transistors are actually active in any given clock cycle

Now, let’s analyze the impact of Moore’s Law on active power. Every 2 years, the same processor shrinks to 1/2 of its previous size, so C' = C_old/2 . However, because we want to build a more powerful processor, we put 2 of them on the chip (two cores), so overall C_new = C' * 2 = C_old . Let’s say the voltage V and the frequency f stay the same and the same fraction of transistors α is active. Then the active power is essentially unchanged.

In reality, the smaller transistors are faster, so we also raise the clock frequency of the processor. Let’s say that the new clock frequency is 25% higher than the old one, f_new = 1.25 * f_old . Then the overall active power will also be 25% higher than before.

However, the smaller transistors may also let us lower the power supply voltage while maintaining the same speed. Let’s say that the new supply voltage is 0.8 of the old one, V_new = 0.8 * V_old . Because power depends on the square of the voltage, this gives a significantly lower power consumption: P_new = 0.8² * P_old = 0.64 * P_old.

In practice, we don’t lower the voltage that much because we also want a higher frequency. But we don’t want the frequency to be too high either, because that raises the active power. Thus, we may choose V_new = 0.9 * V_old and f_new = 1.1 * f_old . Applying the equation gives P_new = 0.81 * 1.1 * P_old ≈ 0.9 * P_old . What we end up with is a chip that has two cores instead of one, with a lower supply voltage and a higher frequency. Each of these cores is 10% faster than the old one, and yet the power consumption has gone down.
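The four scenarios above can be checked with a few lines of arithmetic. This is a small sketch that assumes active power is proportional to C · V² · f · α, as the term definitions suggest; the helper name `dynamic_power_ratio` is invented for this example, and every argument is a new-to-old ratio rather than an absolute value.

```python
def dynamic_power_ratio(c: float = 1.0, v: float = 1.0,
                        f: float = 1.0, alpha: float = 1.0) -> float:
    """Return P_new / P_old, assuming P is proportional to C * V^2 * f * alpha.

    Each argument is the ratio new/old for that quantity (1.0 = unchanged).
    """
    return c * v ** 2 * f * alpha


# Scenarios taken from the discussion above.
scenarios = {
    "half-size core, two cores, same V and f": dict(c=0.5 * 2),
    "25% higher frequency": dict(f=1.25),
    "20% lower voltage": dict(v=0.8),
    "compromise: 0.9x voltage, 1.1x frequency": dict(v=0.9, f=1.1),
}

if __name__ == "__main__":
    for name, ratios in scenarios.items():
        print(f"{name}: P_new = {dynamic_power_ratio(**ratios):.3f} * P_old")
```

The compromise case comes out at 0.891, which the text rounds to 0.9.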

(5) Static Power

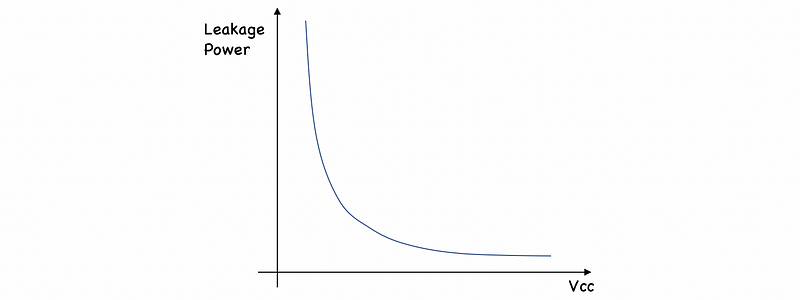

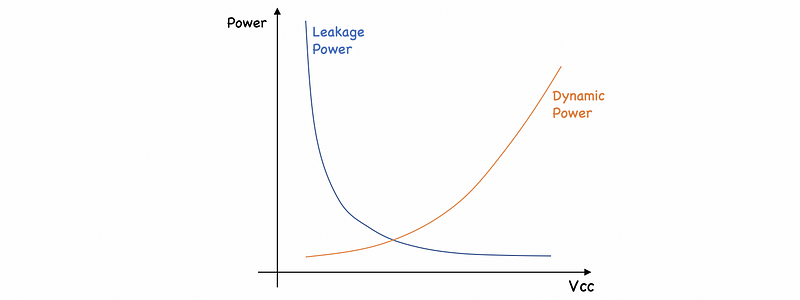

The main component of the static power is leakage power.

Let’s now consider static power, which is what prevents us from lowering the voltage too much. Remember that in the computer system experiments we treated transistors (or BJTs) as switches. Now, let’s think of transistors as electronic faucets whose valves are controlled by another voltage or current.

However, the valves of transistors are not perfect.

When the voltage at the base drops, the faucet closes. But the pressure at the collector is high and the valve (base) does not close perfectly, so some water still leaks through. This leakage is one component of static power. If we lower the voltage in order to lower the dynamic power, the valves close even less tightly, so the leakage grows and the static power increases. In other words, leakage power is higher when the voltage Vcc is lower: the two are negatively related.

Meanwhile, we also know that a higher voltage (aka. Vcc or V) means higher dynamic power, and the two are quadratically related,

$$P_{\text{dynamic}} \propto V^2$$

There are other components of static power, but they are small enough to ignore here. Thus, we use the leakage power to represent static power. So the overall power of a computer is,

$$P_{\text{total}} = P_{\text{dynamic}} + P_{\text{static}} \approx P_{\text{dynamic}} + P_{\text{leakage}}$$

Then we can evaluate the overall power as a function of voltage and find the voltage that minimizes it.
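As a rough illustration of that trade-off, the sketch below sweeps the supply voltage and picks the value with the lowest total power. Everything numeric here is an assumption: the quadratic dynamic term follows the text, but the exponential leakage curve and the constants `k_dyn`, `k_leak`, and `steepness` are hypothetical stand-ins chosen only so that leakage grows as the voltage drops. Real leakage behavior depends on the process technology.

```python
import math


def dynamic_power(v: float, k_dyn: float = 1.0) -> float:
    """Dynamic power grows with the square of the supply voltage."""
    return k_dyn * v ** 2


def leakage_power(v: float, k_leak: float = 5.0, steepness: float = 4.0) -> float:
    """HYPOTHETICAL leakage model: leakage rises as the voltage is lowered."""
    return k_leak * math.exp(-steepness * v)


def total_power(v: float) -> float:
    """Overall power, using leakage to stand in for all static power."""
    return dynamic_power(v) + leakage_power(v)


def best_voltage(lo: float = 0.3, hi: float = 1.5, steps: int = 1201) -> float:
    """Grid search for the voltage (arbitrary units) with the lowest total power."""
    candidates = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    return min(candidates, key=total_power)


if __name__ == "__main__":
    v = best_voltage()
    print(f"lowest total power at V = {v:.3f} (arbitrary units)")
```

The point of the sketch is only the shape of the curve: too high a voltage is dominated by the dynamic term, too low a voltage is dominated by leakage, and the minimum sits in between.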

3. Fabrication Cost and Chip Area

(1) The Chip Fabrication Process

- Step 1: We take a silicon disk called a wafer and put it through a number of steps. Each of these steps prints some aspect of the circuit that should appear on each processor.

- Step 2: At the end of these steps, we cut the wafer up into small pieces. Each of the small pieces is going to be a chip.

- Step 3: We take each chip and put it into a package (with pins on it).

- Step 4: We test the packaged chips. The chips that work well are sold on the market. The chips that fail testing are thrown away.

Note that the wafer is a silicon disk that is about 12 inches in diameter and costs thousands of dollars to put through the manufacturing process. This means we hope to get as many working chips as possible from a single wafer. Thus, the size of a chip (the chip area) matters a great deal for the fabrication cost because it determines how many chips we can get from a single wafer.

(2) Fabrication Yield

The fabrication yield is the percentage of chips at the end that we get to sell. So,

$$\text{yield} = \frac{\text{number of working chips}}{\text{number of chips on the wafer}}$$

We will see that the size of the chip affects the yield: not only do we get fewer chips per wafer when they are big, but a smaller percentage of them will work. This is because the wafer is not perfect and typically has spots on it called defects. Also, some of the chips near the edge are incomplete because the wafer is round.

(3) Fabrication Cost Example

Suppose manufacturing a wafer costs $5,000 and there are 10 defects per wafer. Now we would like to manufacture three sizes of chips,

- Small Chips: 400 chips per wafer

- Large Chips (4x small): 96 chips per wafer

- Huge Chips (4x large): 20 chips per wafer

For a small chip, the manufacturing cost is between $12.5 and $12.8 per chip,

By minimum (every chip works): $5,000/400 = $12.5

By maximum (each of the 10 defects ruins a different chip): $5,000/(400-10) ≈ $12.8

For a large chip, the manufacturing cost is between $52.1 and $58.1 per chip,

By minimum: $5,000/96 ≈ $52.1

By maximum: $5,000/(96-10) ≈ $58.1

For a huge chip, the manufacturing cost is between $250 and $500 per chip,

By minimum: $5,000/20 = $250

By maximum: $5,000/(20-10) = $500
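The same arithmetic can be scripted. The sketch below uses the wafer cost, defect count, and chips-per-wafer figures listed in the example (400, 96, and 20 chips); the function names are invented for illustration, and the worst case assumes that every defect lands on a different chip.

```python
WAFER_COST = 5000.0        # dollars per wafer, from the example
DEFECTS_PER_WAFER = 10     # defects per wafer, from the example


def cost_range(chips_per_wafer: int,
               wafer_cost: float = WAFER_COST,
               defects: int = DEFECTS_PER_WAFER) -> tuple[float, float]:
    """Return (best, worst) cost per sellable chip.

    Best case: every chip works. Worst case: each defect ruins a different chip.
    """
    best = wafer_cost / chips_per_wafer
    worst = wafer_cost / (chips_per_wafer - defects)
    return best, worst


def worst_case_yield(chips_per_wafer: int, defects: int = DEFECTS_PER_WAFER) -> float:
    """Fraction of chips that still work if each defect ruins a different chip."""
    return (chips_per_wafer - defects) / chips_per_wafer


if __name__ == "__main__":
    for name, chips in (("small", 400), ("large", 96), ("huge", 20)):
        best, worst = cost_range(chips)
        print(f"{name:5s}: ${best:.2f} to ${worst:.2f} per chip, "
              f"worst-case yield {worst_case_yield(chips):.1%}")
```

Note how the worst-case yield falls from 97.5% for the small chip to 50% for the huge chip, which is why the cost per chip grows much faster than the chip area.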

(4) Fabrication Cost And Moore’s Law

With Moore’s Law, we now have two strategies with respect to fabrication cost,

- Smaller chips with the same performance: reduce the fabrication cost of the chip production

- Same chip area with better performance: maintain the fabrication cost of the chip production