High-Performance Computer Architecture 18 | Memory Ordering

High-Performance Computer Architecture 18 | Memory Ordering

- Review

We have seen that using the ROB and Tomasulo's Algorithm, we can enforce the order of dependencies on registers between the instructions. The remaining question is that how we can conduct the memory access ordering.

Commonly, in the program, we have lots of load/store instructions. So our question is, should these instructions be done in the programming order or should they be reordered too. So far, we have eliminated,

- Control Dependencies by branch prediction

- False Data Dependencies of Registers by register renaming

- True Data Dependencies (RAW Dependencies) by Tomasulo’s algorithm

- OOE Exceptions by reorder buffer (ROB)

This section discusses a method for handling memory dependencies, the Load/Store Queue.

2. Memory Ordering

(1) Timing for Memory Write

For store instructions, the memory writes actually happens at the commit stage, because it is unsafe to update memory at any point before the instruction commits. An instruction that is not yet committed can be canceled because of a branch misprediction, so doing a memory write prematurely (before commit) might mean later we have to undo the accesses to the memory. Thus, we have to delay the memory stores until commit.

(2) Timing for Memory Read

We have known that the memory write should happen at commit. The question is that if we write the values on stores at commits, where does the load get data? In fact, we want our load instructions to get data as early as possible so that we can finish those loads and supply the data to the subsequent instructions depend on those loads.

(3) Load-Store Queue (LSQ)

The load-store queue (LSQ) is a structure like ROB (means that things will be put into this queue in order) for implementing the as-early-as-possible memory read and at-commit memory write. For each entry in an LSQ, there should be a 1-bit L/S field that tells about which instruction do we have (load or store), a field for what is the address that this instruction is accessing, a field for the value that instruction should be storing or loading, and there’s also a field for the showing whether or not this instruction is completed.

(4) Load-Store Queue (LSQ): An Example

Now, let’s see an example of the load-store queue. Suppose we have the following program,

LW R1, 0(R1)

SW R2, 0(R3)

LW R4, 0(R4)

SW R2, 0(R0)

LW R5, 0(R8)

And the registers and the memory status at the beginning should be,

After the LW R1, 0(R1) code, because we have a load and the load should get the data as early as possible, we directly achieve the data in the memory and complete this instruction.

Then we should run SW R2, 0(R3) . When we are doing so, the present instruction has to wait for the commit stage so it will not be marked as completed.

The next one is LW R4, 0(R4) and this one is a little bit tricky. The load instruction is going to load from the same address of the last save instruction which is not committed yet. So if the present load instruction load from the memory, it will get a completely wrong value. To deal with this problem, we have 2 options. One is to wait until the completion of the last save instruction. However, this can be inefficient because the save instruction will have to take a long time before commit. The other is to check the load-store queue each time before we load a new value from the memory. If there are save instructions with the same address that have not been completed yet, we can directly read from the last one before the current instruction.

For example, in this case, because the last instruction is to write to the same memory address we would like to read, we can directly load from the value of this instruction instead of going to the memory. This method is called store-to-load forwarding.

After the last two instructions, the LSQ should finally be,

(5) Exceptions for Store-to-Load Forwarding: Out-Of-Order Execution

Now, let’s think about another situation. It is entirely possible that because of the delay, a store instruction can have no address when a load is checking the queue. This situation may be caused by the as-early-as-possible rule for the load instructions. For example,

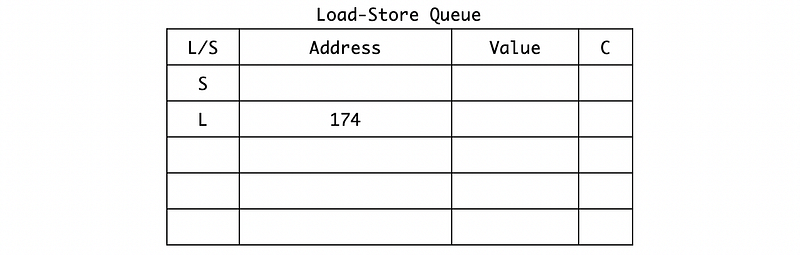

In the table above, the load instruction would like to check address 174 in the LSQ for store-to-load forwarding at the moment, but there appears to be a store instruction with no address. So the current load instruction can not know whether it should load from the memory or not. So here, we have three options to deal with this problem,

- OPTION 1. Wait for the store instruction to get its address. This means that we have to perform all the load/store instructions in order. When the store gets the address, we can compare it to the address needed by the load instructions and proceed. Although this idea is simple and easy for us, it can be slow for the processor and leads to unnecessary waiting.

- OPTION 2. Wait for all the previous store instructions. With the previous idea, we have to wait for all the load/store instructions to get the address. However, this can be improved because we can know that the load instructions won’t work for generating the address of the store instruction. Although this method improves some sorts of efficiency, it can still be

- OPTION 3. Directly goes to memory to grab the data. It is also okay if we ignore the possible dependency of the previous instructions and just load from the memory. This method is pretty aggressive and we are really possible to get wrong values from the memory. However, this method is good for efficiency. What we have to do is that if we read a wrong value from the memory, we need to recover the value and also supply this value to the later instructions. Most modern processors actually go with this option because it produces the best performance and the cost of recovery is affordable.

(6) Example Out-Of-Order Load/Store Execution

Suppose we have the following code,

I1: LW R3, 0(R6)

I2: ADD R7, R3, R9

I3: SW R4, 0(R7)

I4: SUB R1, R1, R2

I5: LW R8, 0(R1)

Assume that the address in R6 is not in the cache (means that we have to take a longer time to read from the memory), thus we can have an out-of-order execution for these instructions.

Firstly, the first load instruction I1 only depends on R6, then it is supposed to dispatch in the first place. But because R6 is a cache miss, it has to go to the memory for reading the data and it will take a while until this load comes back.

The instructions I2 and I3 only depend on I1, so they will wait until I1 returns. Because I3 is a store instruction, so the address can not be available until I1 returns.

Because I4 only depends on R1 and R2, it will be executed very quickly and then the load instruction I5 will then have the address stored in R1. When the I5 instruction dispatches, suppose the address in R1 is already in the cache, then this is going to be a cache hit. The value of this address will be returned very fast. However, before getting this value in the cache, we have to check the LSQ and make sure there is not a save instruction for the same address, or we will conduct the load-to-store forwarding.

But wait! Because we are stilling waiting for the address of the I3 store instruction, we actually can not figure out if need a load-to-store forwarding. If we use the option of going to the memory directly and grab the data, there can be two possibilities.

The first one is that the store address of I3 doesn’t match the load address of I5, so it will okay for us to read from the memory. However, the other one is that the store address of I3 matches the load address of I5, so the I5 actually loaded some stale value from the memory.

(7) Solution 1: In-Order Execution

At the point of dispatching the load instruction I5, we will check whether there are any proceeding instructions that might eventually result in the same address. Because the load instruction I1 and the store instruction I3 are not finished yet, we are also not going to execute I5 if we want to maintain an in-order load/store execution.

Of course, you can say that this solution is suboptimal if the store doesn’t store at the same address. We have been waiting at I5 for a very long time, and thus all of the later instructions depend on this instruction will be delayed. We will discuss the second solution of recovery later.

(8) End of an LSQ

Before we talk about the recovery solution, let’s first see how we can complete an LSQ. In the previous example, we only add the instructions to the LSQ without changing the data cache. Now, we are going to focus on how we can end an LSQ after the execution and write to the memory. Suppose we have the following LSQ and data cache,

Now, let’s start to commit these load/store instructions, and based on the at-commit rule, we can now write to the memory. We have said that we need to send the values to the memory or the data cache at the time the store commits not at the time it executes, and here’s why. If we want to take an exception when committing the instructions, we can flush to the front (youngest one) of the queue, and the data in the data cache or memory is exactly the value that should be at our program exception.

So finally, the LSQ and data cache should be,

3. Relationship Between LSQ, ROB, and RS

When we issue a load/store instruction, we need,

- a ROB (reorder buffer) entry

- an LSQ (load-store queue) entry

Then to execute a load/store instruction, we have to,

- compute an address

- produce a value

- if this is a load instruction, we have also to write-result/broadcast

- commit the instruction by free the ROB & LSQ entries

- if this is a store instruction, we have to send (write) the data to memory

When we issue a non-load/store instruction, we need,

- a ROB (reorder buffer) entry

- an RS (reservation station) for the right type