High-Performance Computer Architecture 32 | Memory Inconsistency, Sequential Consistency, Relaxed…

High-Performance Computer Architecture 32 | Memory Inconsistency, Sequential Consistency, Relaxed Consistency, Data Races, and Data-Race-Free Program

- Memory Inconsistency Problems

(1) Consistency Vs. Coherence

Before we discuss consistency, let’s first differ it from coherence. What coherence does is that it defines the order of accesses observable by different threads to the same address. In order to make the data on the same address the same in all the caches containing the corresponding block and in the memory, we have to maintain cache coherence. To allow coherence, we have to allow cache-to-cache transfer between caches.

However, memory consistency defines the order of accesses to different addresses. If we have out-of-order reads and writes from different addresses, the reader result we can get will be different from what we expect. Let’s see an example about why this actually matters.

(2) Memory Consistency: An Example

It turns out that consistency does matter and let’s see an example of why it matters. Suppose we have two threads A and B, and each of them will execute the following programs,

Thread A Thread B

============= =============

SW 1, D LW F, R1

SW 1, F LW D, R2

Suppose initially, we have both D and F in the memory with the value 0, then if we don’t have any synchronization, the behaviors we expect will be,

SW 1, D

SW 1, F

LW F, R1

LW D, R2

---------------

R1 = 1, R2 = 1

SW 1, D

LW F, R1

LW D, R2

SW 1, F

---------------

R1 = 0, R2 = 1

LW F, R1

LW D, R2

SW 1, D

SW 1, F

---------------

R1 = 0, R2 = 0

We can not get a result of R1 = 1, R2 = 0 because if we have R1 = 1, then it must mean that we have executed SW 1, D and the value of R2 must be 1. However, if we don’t maintain the order of access to different addresses, we can have an out-of-order load from the memory as follows,

Thread A Thread B

============= =============

SW 1, D LW D, R2

SW 1, F LW F, R1

If we have a real execution order like that, we can have the following actual behaviors,

SW 1, D

SW 1, F

LW D, R2

LW F, R1

---------------

R1 = 1, R2 = 1

SW 1, D

LW D, R2

LW F, R1

SW 1, F

---------------

R1 = 0, R2 = 1

LW D, R2

LW F, R1

SW 1, D

SW 1, F

---------------

R1 = 0, R2 = 0

LW D, R2

SW 1, D

SW 1, F

LW F, R1

---------------

R1 = 1, R2 = 0

Now, we can find out that we will have a case of R1 = 1, R2 = 0 that doesn’t exist in the expected behaviors. Note that we didn’t violate any rules of coherence, so this is what we called a consistency problem.

(3) Memory Inconsistency: Data-Ready Flag Synchronization

Now, let’s see another example. Suppose we have two threads A and B, and they are executing the following programs,

/* global */

flag = 0;

data = 0;

Thread A Thread B

===================== =====================

while (!flag) data = 10;

wait; data = data + 5;

print data; flag = 1;

Assume that we have perfect branch predictions with all stores strictly in the program order and if all the loads can be reordered. Because all the stores should be ordered, we have to keep the sequence of codes in thread B. The problem is in thread A when we have perfect branch prediction. When we predict that we will have flag = 1 in the future, then we are going to break the loop and fetch the data. However, at this moment, we can not make sure whether the data we fetch is 0 (thread B not executes), 10 (thread B executes 1 instruction), or 15 (thread B executes 2 or 3 instructions).

This inconsistency problem is called the data-ready flag synchronization.

(4) Memory Inconsistency: Thread Termination

Let’s see another inconsistency problem called the thread termination problem where

- thread A creates thread B

- thread B executed and updates the data

- thread A waits until B exits by a system call in thread A. This step will have branch prediction

- when thread B has done, OS will mark that B is done

So when we confirm the data, thread B can still execute because of the branch prediction. This example is quite similar to the data-read flag synchronization problem.

2. Memory Consistency Models

(1) Consistency Models

Let’s now introduce some of the consistency models. Commonly, there will be two models of consistency, the sequential consistency model, and a family of relaxed consistency models,

- Sequential consistency

- Relaxed consistency: some of the models that are mostly used are called weak consistency, processor consistency, release consistency, lazy release consistency, scope consistency, and etc.

(2) Sequential Consistency

For programmers, the most natural type of consistency is called sequential consistency. It is said that the result of any execution should be as if accesses are executed by each processor were executed in order, and accesses among different processors were arbitrarily interleaved. Therefore, we can do anything we want to interleave accesses from different processors. But what comes from each of the processors needs to be exactly in the program order.

So the simplest implementation of a sequential consistency and a core performs the next memory access only when all previous accesses are completely successful. This means that if we have the following program,

while (!flag) wait;

print data;

We can not access the memory to get the data until the while loop finishes. So then we will have no inconsistency problem.

However, there are drawbacks to the simple sequential consistency. If we issue the next access to the memory only when all prior accesses are complete, the memory level parallelism (MLP) can only be 1. In this case, because different accesses to the memory can not overlap each other, we will spend a lot of time loading the data from the memory and this is really bad for performance.

(3) Relaxed Consistency

An alternative approach that tries to create better performance is to actually relax the consistency so that we will not expect sequential consistency. Before we talk about the relaxed consistency, let’s first have a look at what we can do to improve the consistency. Suppose we have the following program that loads A and B,

LW A, R1

...

LW B, R2

If there is no save instruction between these two loads, we will have no inconsistency problem. However, if we have a load instruction between these two loads and the loads are executed out-of-order, then we will have an inconsistency problem.

LW A, R1

RD 1, A

LW B, R2

So basically, in a program, we have 4 types of read/write ordering,

WR A -> WR BWR A -> RD BRD A -> WR BRD A -> RD B

For sequential consistency, we must obey all of these four orders. However, for a relaxed consistency, the following order doesn’t have to be obeyed because it will not affect the result,

RD A -> RD B

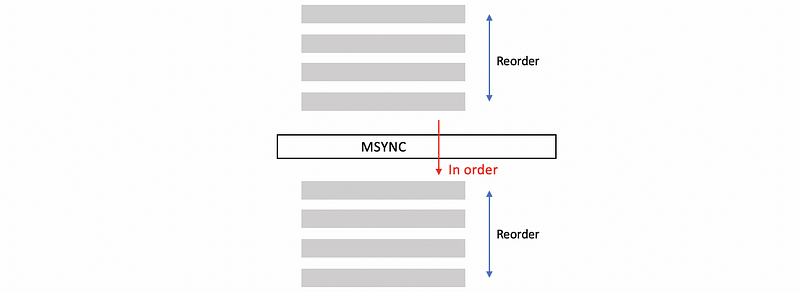

(4) msync Instruction

When we do relaxed consistency, we are allowed to reorder the normal accesses, and we will also add some special non-reorderable accesses by some system calls. An example for managing non-reordering accesses is the x86 msync instruction.

So now we will have all the accesses may be reordered, but none of them can be reordered across the msync instruction.

So now for the previous case, we can add a msync after while to maintain that the data is only read after the while statement.

while (!flag) wait;

msync;

print data;

3. Data Races

(1) The Definition of the Data Race

The data race occurs when accesses to the same address by different cores that are not ordered by synchronization. So to have a data race problem, we need to have one processor reading and another writing (RD -> WR), or the other way around (WR -> RD), or both of them writing (WR -> WR). Because we can hard to decide which one happens first and which one happens later without proper synchronization, we will then have data races.

(2) Data-Race-Free Program

A data-race-free program is a program that can not create any data races in its executions, and that program is considered to be well synchronized so that any accesses to the data are correctly ordered by synchronization.

A key property of a data-race-free program is that it will behave exactly the same in a sequential consistency even if we run them in any relaxed consistency model because the synchronization maintains the order of the programs. So the program can be debugged in a sequential consistency model, then run on a relaxed consistency model.

However, for debugging a non-data-race-free program, anything can happen because we have data race problems. The results can be really weird and this makes the debugging process very difficult. So some processors support sequential consistency only when debugging.