Linear Algebra 5 | Orthogonality, The Fourth Subspace, and General Picture of Subspaces

1. Recall

(1) Different Subspaces

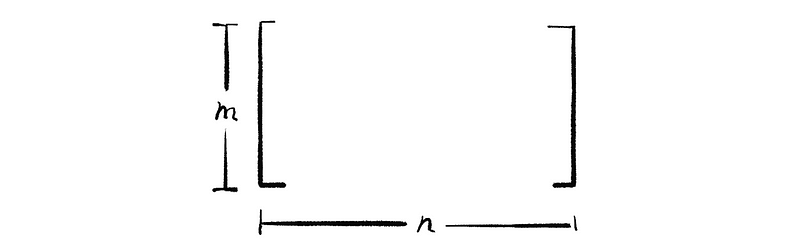

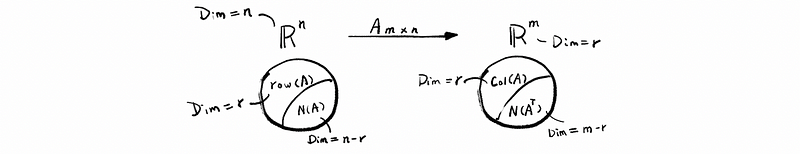

Let’s say we have an m × n matrix A with A: ℝⁿ → ℝᵐ as

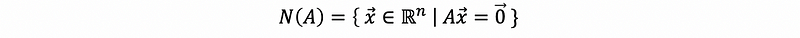

so that the null space N(A) is a subspace of ℝⁿ,

the column space Col(A) is a subspace of ℝᵐ,

and finally, the row space row(A), the span of the rows of A, is a subspace of ℝⁿ.

It also helps to keep in mind the unfinished picture that we drew in the last section.

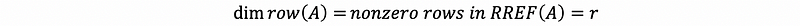

(2) Definition: Rank

When we reduce the matrix A to row echelon form, the rank of A is the number of pivots in REF(A), and it is denoted by r.

(3) Definition: Basis

A basis for a subspace is a set of linearly independent vectors that span the subspace.

(4) Definition: Dimension

The number of vectors in a basis is called the dimension of the subspace.

- a basis for Col(A) is given by the pivot columns of the original matrix A

- a basis for row(A) is given by the nonzero rows of REF(A)

- a basis for N(A) is given by the special solutions to Ax = 0.

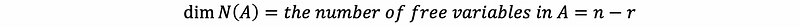

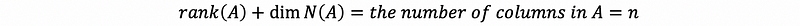

- rank-nullity theorem: rank(A) + dim N(A) = n, where n is the number of columns of A

(5) A Quick Example

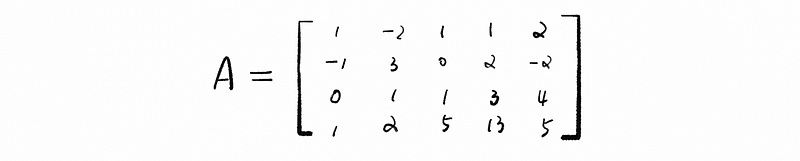

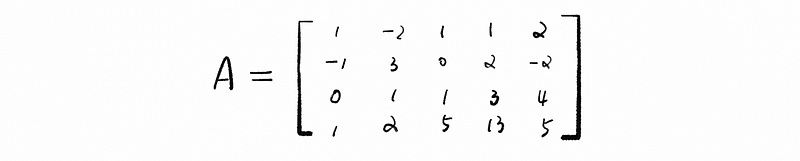

Suppose we have a 4 × 5 matrix A as follows,

Find a basis for each of the three subspaces.

Ans:

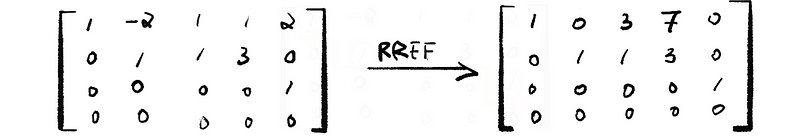

By row reduction,

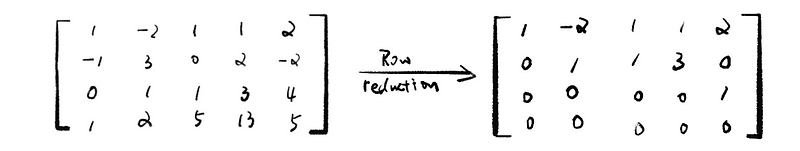

then, from REF(A), we can read off,

- rank = 3 = dim Col(A) = dim row(A)

- dim N(A) = 5 − 3 = 2
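The pivot count can also be checked mechanically. The following is a minimal pure-Python sketch of row reduction, not the article's own computation: the matrix `A` is a hypothetical 4 × 5 stand-in (the article's matrix appears only in a figure), built so that its pivot columns and rank agree with the values quoted in this example, and the helper name `rref` is likewise an assumption of the sketch.

```python
from fractions import Fraction

# Hypothetical 4 x 5 matrix (NOT the article's figure): chosen so that the
# pivot columns are 1, 2, 5 and the rank is 3, as in the example.
A = [
    [1, 0, 3, 7, 0],
    [1, 1, 4, 10, 0],
    [0, 1, 1, 3, 1],
    [2, 2, 8, 20, 1],
]

def rref(rows):
    """Return (R, pivots): reduced row echelon form and zero-based pivot columns."""
    M = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # index of the row that will receive the next pivot
    for c in range(len(M[0])):
        # Look for a non-zero entry in column c, at or below row r.
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue  # no pivot here: column c belongs to a free variable
        M[r], M[p] = M[p], M[r]
        pivot = M[r][c]
        M[r] = [x / pivot for x in M[r]]  # scale the pivot to 1
        for i in range(len(M)):
            if i != r:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]  # clear column c
        pivots.append(c)
        r += 1
        if r == len(M):
            break
    return M, pivots

R, pivots = rref(A)
rank = len(pivots)
nullity = len(A[0]) - rank  # rank-nullity: rank + dim N(A) = n
print(pivots, rank, nullity)  # [0, 1, 4] 3 2
```

Exact fractions are used instead of floats so that the test for a zero entry is reliable; with floating point, a tolerance would be needed.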

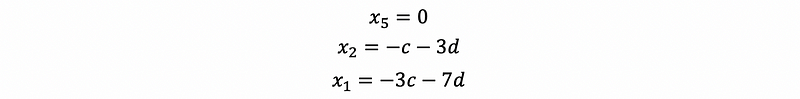

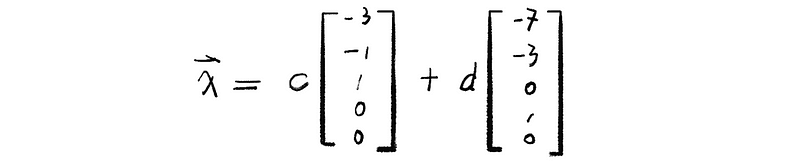

Now let’s start with N(A). The free variables are x3 and x4, and to compute N(A) we have to solve Ax = 0. Setting x3 = c and x4 = d, back substitution gives,

Finally, we obtain the solution x as a result,

or, equivalently, we can find the special solutions by setting c = 1, d = 0 and then c = 0, d = 1.

So the vectors [-3 -1 1 0 0] and [-7 -3 0 1 0] span the null space N(A), and the set {[-3 -1 1 0 0], [-7 -3 0 1 0]} is a basis of N(A) provided it is linearly independent.
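The special solutions can be read off the reduced row echelon form directly. In the sketch below, the matrix `R` is the RREF implied by the data quoted in this example (4 rows, pivot columns 1, 2, 5, and the two special solutions above); it is written out by hand here rather than copied from the article's figure, and the function names are assumptions of the sketch.

```python
# RREF implied by the example: pivot columns 1, 2, 5 and free columns 3, 4.
# Written out by hand; the article's own matrix is only shown in a figure.
R = [
    [1, 0, 3, 7, 0],
    [0, 1, 1, 3, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
pivot_cols = [0, 1, 4]  # zero-based indices
free_cols = [2, 3]

def special_solutions(R, pivot_cols, free_cols):
    """One solution of Rx = 0 per free variable (that variable = 1, other free ones = 0)."""
    n = len(R[0])
    solutions = []
    for f in free_cols:
        x = [0] * n
        x[f] = 1
        # Row i of the RREF reads: x[pivot_i] + (free terms) = 0.
        for row, p in zip(R, pivot_cols):
            x[p] = -row[f]
        solutions.append(x)
    return solutions

def matvec(M, x):
    """Matrix-vector product M x."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]

basis = special_solutions(R, pivot_cols, free_cols)
print(basis)  # [[-3, -1, 1, 0, 0], [-7, -3, 0, 1, 0]]
print([matvec(R, x) for x in basis])  # [[0, 0, 0, 0], [0, 0, 0, 0]]
```

Setting c = 1, d = 0 and then c = 0, d = 1 corresponds to the two passes of the loop over `free_cols`.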

2. The Proofs of Linear Independence in Subspaces

(1) Recall: The Definition of Linear Independence

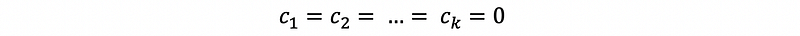

A set of vectors {v1, v2, …, vk} in a vector space V is linearly independent provided that,

(a) Perspective 1

whenever c1·v1 + c2·v2 + … + ck·vk = 0,

we must have c1 = c2 = … = ck = 0.

(b) Perspective 2

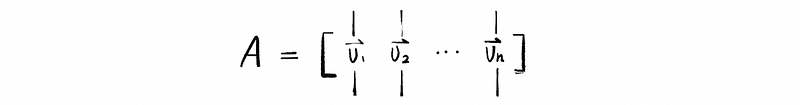

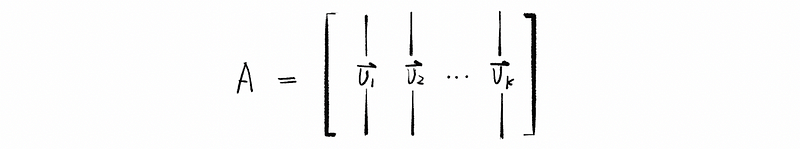

Another way to think about linear independence is to take {v1, v2, …, vk} and put them into a matrix A (as columns),

(the size of A is m × k). Then the set {v1, v2, …, vk} is independent ⇔ Ax = 0 has only the trivial solution (x = 0) ⇔ N(A) = {0}

(c) Perspective 3

Another perspective: because A is m × k, it defines a map A: ℝᵏ → ℝᵐ, and the set {v1, v2, …, vk} is independent exactly when this map is a one-to-one function from ℝᵏ to ℝᵐ. A one-to-one function means that if Av = Aw, then v = w.

(2) Proof of Linear Independence in Null Space

Why are those vectors linearly independent? Look at the coordinates of a linear combination x of the two vectors: the only way to make x equal to 0 is to take c = d = 0, so no linear combination can be zero unless the coefficients themselves are zero.

This works because the third and fourth coordinates correspond to the free variables, which are exactly the choices c and d that we made. In any linear combination of the two vectors, the third coordinate must be c and the fourth must be d. So by the definition of linear independence (perspective 1), the special solutions that span the null space are linearly independent.

(3) Proof of Linear Independence in Column Space

Note that the nullspace tells us about linear dependence relations among the columns of A. If there is a non-trivial solution of Ax = 0, then by the definition of linear independence (perspective 2) the columns of A are linearly dependent; they are linearly independent only when N(A) = {0}.

Also note that asking whether a set of vectors {v1, v2, …, vk} is linearly independent is the same as asking whether the matrix A whose columns are those vectors has null space N(A) = {0}.

To check this, row reduce A to row echelon form and make sure that there are k pivots, so that N(A) = {0}, that is, dim N(A) = 0.
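The pivot-counting test can be sketched in a few lines of Python. This is an illustrative implementation under stated assumptions: the function names `rank` and `is_independent` and the sample vectors are made up for the sketch, and exact fractions are used so that zero tests are reliable.

```python
from fractions import Fraction

def rank(rows):
    """Count the pivots found by forward elimination (exact arithmetic)."""
    M = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for c in range(len(M[0])):
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r

def is_independent(vectors):
    """k vectors are independent iff the matrix with them as columns has k pivots."""
    as_columns = [list(row) for row in zip(*vectors)]  # m x k matrix
    return rank(as_columns) == len(vectors)

# Hypothetical sample vectors, chosen only for illustration.
print(is_independent([[1, 0, 0], [1, 1, 0], [1, 1, 1]]))  # True
print(is_independent([[1, 2, 3], [2, 4, 6]]))             # False
```

The second set fails because its second vector is twice the first, so the 3 × 2 matrix has only one pivot and N(A) contains non-zero vectors.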

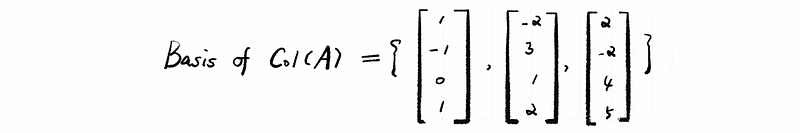

Now let’s write down a basis for Col(A). For Col(A), we take the pivot columns of A, so we have,

Recall the RREF of A:

The pivot columns are columns 1, 2, and 5. In RREF(A) they are clearly linearly independent, because the only non-zero entry in each of these columns is its pivot, so a linear combination of them can equal zero only if every coefficient is zero. Row operations do not change the solutions of Ax = 0, so the same holds for columns 1, 2, and 5 of the original matrix A.

(4) Proof of Why The Span of Pivot Columns is a Basis of the Column Space

We have shown in (3) that the pivot columns of a matrix are linearly independent. So to prove that this set is a basis, it remains to prove that it spans Col(A).

Because the non-pivot columns (those of the free variables) can be written as linear combinations of the pivot columns, we don’t need any non-pivot column to span Col(A): they are redundant and add nothing new.

(5) From Pivot Columns to Non-Pivot Columns

The null space solutions tell us precisely which linear combination of pivot columns gives each non-pivot column. So we don’t actually need the free-variable columns.

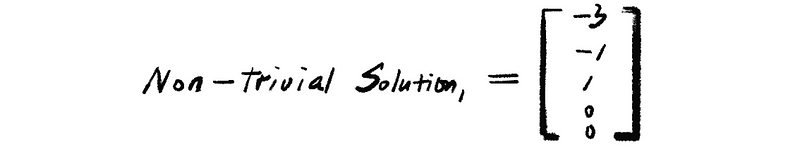

For example, in the last example, one of the non-trivial solutions of Ax = 0 is,

then we multiply the matrix A by this solution,

and we get,

This means that the column vector a3 can be written as a linear combination of the vectors a1 and a2. Thus, the non-trivial solutions of Ax = 0 give us exactly the linear combinations of pivot columns that produce the non-pivot columns.
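A small numeric check makes this concrete. The matrix `A` below is a hypothetical 4 × 5 stand-in (the article's matrix is only shown in a figure), built so that the special solution [-3 -1 1 0 0] from the example solves Ax = 0; the helper names are assumptions of the sketch.

```python
# Hypothetical 4 x 5 matrix (an assumption, not the article's figure), built so
# that [-3, -1, 1, 0, 0] is a solution of Ax = 0, as in the example.
A = [
    [1, 0, 3, 7, 0],
    [1, 1, 4, 10, 0],
    [0, 1, 1, 3, 1],
    [2, 2, 8, 20, 1],
]

def column(M, j):
    """Return column j (zero-based) of M as a list."""
    return [row[j] for row in M]

def matvec(M, x):
    """Matrix-vector product, i.e. a linear combination of the columns of M."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]

x = [-3, -1, 1, 0, 0]
a1, a2, a3 = column(A, 0), column(A, 1), column(A, 2)

# Ax = -3*a1 - 1*a2 + 1*a3 = 0, which rearranges to a3 = 3*a1 + 1*a2.
combo = [3 * u + v for u, v in zip(a1, a2)]
print(matvec(A, x))  # [0, 0, 0, 0]
print(combo == a3)   # True
```

The coefficients of the pivot columns are the negatives of the pivot entries of the special solution, because the free-variable column is moved to the other side of the equation.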

(6) Proof of Linear Independence in Row Space

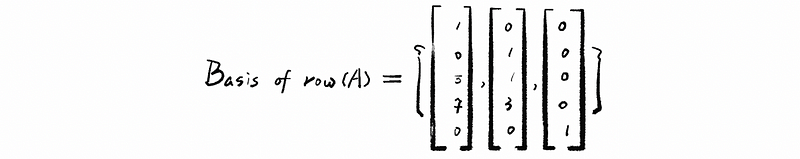

Finally, we can write down a basis of row(A). A basis of row(A) is given by the non-zero rows of RREF(A). So the basis of the row space is,

The proof of linear independence for the row space is quite similar to the proof for the null space. In the reduced row echelon form, each non-zero row has a 1 in its pivot column while every other row has a 0 there, so a linear combination of the non-zero rows of RREF(A) cannot be 0 unless all of its coefficients are zero, which is exactly the definition of linear independence in perspective 1.

3. The Orthogonality Between Subspaces

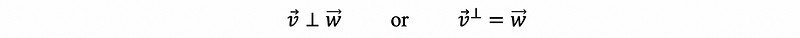

(1) Recall: The Definition of Orthogonality Between Two Vectors

Recall that two vectors v and w are orthogonal if v · w = 0. This is denoted as,

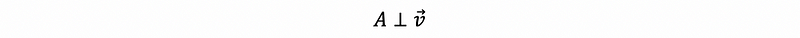

(2) Recall: The Definition of Orthogonality Between Vector And Subspace

Suppose a given vector w satisfies v · w = 0 for every vector v in a subspace A. Then the vector w is orthogonal to the subspace A. This is denoted as,

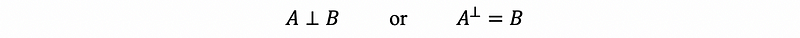

(3) Recall: The Definition of Orthogonality Between Two Subspaces

Suppose v · w = 0 for every vector v in a subspace A and every vector w in a subspace B. Then the subspace B is orthogonal to the subspace A.

In general, if W is a subspace of a vector space V, then the orthogonal complement of W is defined as,

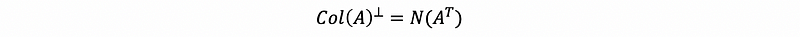

(4) Orthogonality Between The Null Space and The Column Space of Transpose

In the previous section, we have already proved that the nullspace of A is perpendicular to the column space of A transpose, which is to say,

(5) Orthogonality Between The Null Space and The Row Space

Recall that two vectors v and w are orthogonal if v · w = 0. For Ax = 0, we can then observe that,

Here x is any solution of Ax = 0, that is, any vector in the null space N(A), and by definition the rows of A span the row space row(A). Each row of A has dot product zero with x, so ∀ x ∈ N(A) we have,

Based on the definition of orthogonality, we can observe that,

So for any vector v in row(A) and any vector x in N(A), we have v · x = 0. Moreover, the two subspaces have complementary dimensions in ℝⁿ: dim row(A) + dim N(A) = r + (n − r) = n.
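The orthogonality can be verified numerically. In the sketch below, `A` is a hypothetical 4 × 5 matrix (not the one in the article's figure) whose null space is spanned by the two special solutions quoted in the example; the check covers the spanning vectors and then one arbitrary pair of linear combinations.

```python
# Hypothetical 4 x 5 matrix (an assumption), consistent with the example's
# special solutions [-3, -1, 1, 0, 0] and [-7, -3, 0, 1, 0].
A = [
    [1, 0, 3, 7, 0],
    [1, 1, 4, 10, 0],
    [0, 1, 1, 3, 1],
    [2, 2, 8, 20, 1],
]
null_basis = [[-3, -1, 1, 0, 0], [-7, -3, 0, 1, 0]]

def dot(v, w):
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(v, w))

# Every row of A is orthogonal to every basis vector of N(A).
print(all(dot(row, x) == 0 for row in A for x in null_basis))  # True

# By linearity the same holds for arbitrary combinations:
v = [2 * a - b for a, b in zip(A[0], A[2])]        # 2*row1 - row3, in row(A)
x = [5 * s + 4 * t for s, t in zip(*null_basis)]   # 5*s1 + 4*s2, in N(A)
print(dot(v, x))  # 0
```

Checking the spanning sets is enough, since the dot product is linear in each argument.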

(6) Another Perspective of Orthogonality

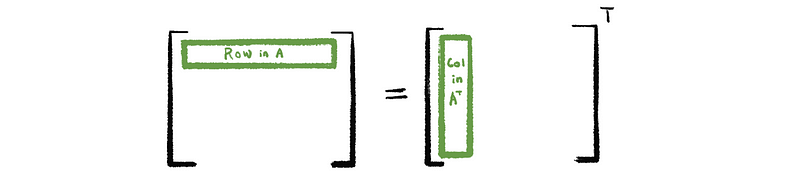

In the discussion above, we have discovered that both the row space of A ( row(A) ) and the column space of A transpose ( Col(A^T) ) are orthogonal to the null space of A ( N(A) ). This is no coincidence: the rows of A are the columns of A transpose, so row(A) = Col(A^T).

(7) Summary

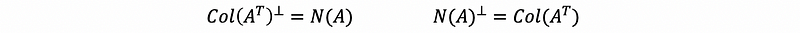

Based on the discussion above, we can conclude that,

and,

4. The Fourth Subspace

We have said that we want to find a subspace of ℝᵐ whose dimension equals m − r. Can we now find a subspace that satisfies these conditions? Let’s think about A transpose.

(1) A Way to Find the Fourth Space

We have proved that the row space of A is orthogonal to the null space of A, and that they are both subspaces of ℝⁿ. So we can guess that the fourth subspace is orthogonal to the column space of A, with both being subspaces of ℝᵐ. Based on the conclusion above, if we use,

then,

So it is quite plausible that the nullspace of A transpose is the fourth subspace that we are looking for. Now, it’s time to prove this. We are almost there!

(2) The Definition of The Left-Null Space

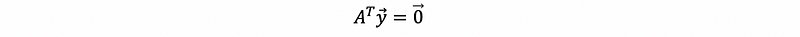

By definition, the nullspace of a matrix is the set of all solutions of its homogeneous equation. For the matrix A transpose, the equation that we have to work on is,

and in this case, y is our solution.

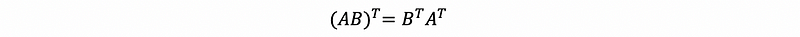

By the property of transpose,

so,

Because y multiplies A from the left in this expression, the null space of A transpose is sometimes called the left-null space of A.

(3) Proof of Linear Independence

Similarly, as we have said, a linear combination of the special solutions of Aᵀy = 0 can be zero only if all the coefficients are zero. Therefore, the special solutions are linearly independent and form a basis of the null space of A transpose.

(4) The Dimension of The Left-Null Space

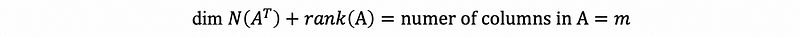

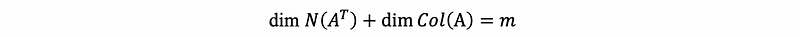

By the rank-nullity theorem applied to A transpose, we have,

then, because the rank of A transpose equals the rank of A, which is the dimension of the column space of A,

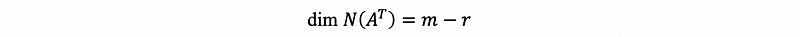

it is then clear that,

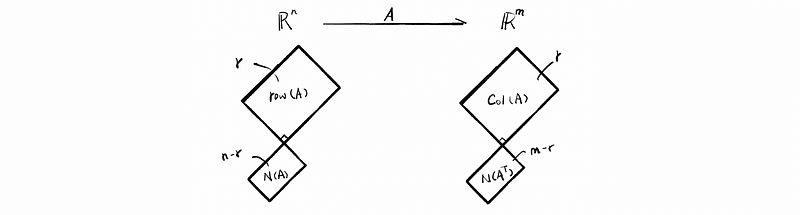

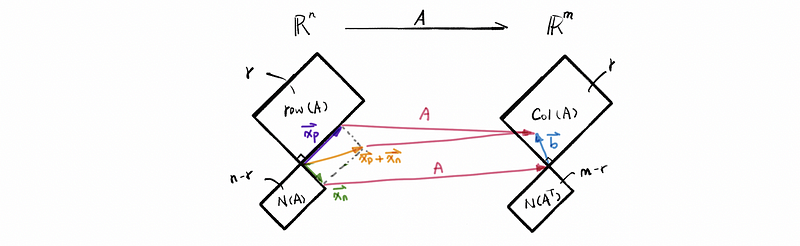

Thus the left-null space is the fourth subspace that we wanted to find. So finally, we can add it to the unfinished graph from last time.

The fourth subspace that fills in the picture is the nullspace of A transpose, with dimension m − r. We can also add the orthogonality relations to this graph as,

(5) The Basis for the Nullspace of A Transpose

We have two methods to find a basis here.

- Option 1: compute it directly

The first one is what we have done before. We first take the transpose of A and then compute its nullspace by finding the special solutions of Aᵀy = 0. But here we would like to introduce another method.

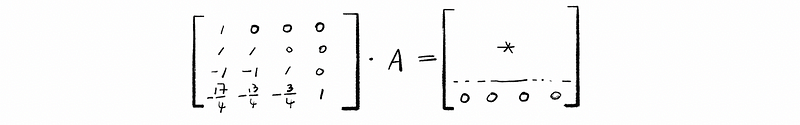

- Option 2: use row operations to find E · A = REF(A)

For example, we have our A as,

In our case, the A tranpose is 5 × 4 and rank = 3, so dim N(A^T) = 4 –3 = 1.

By doing row operations, we can then have,

if we multiply those elimination matrics together, we can then get an inverse of the lower triangular matrix of A as,

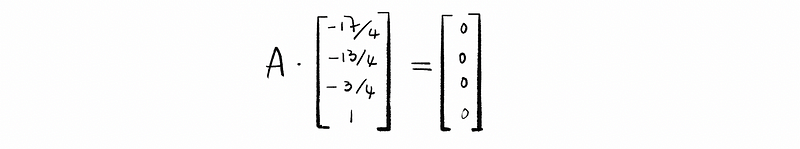

based on the result of the last line, we can then have,

so that it is equivalent to a non-trivial solution of Ax = 0.
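Option 2 can be sketched by row reducing the augmented matrix [A | I]: the right-hand block records the product E of all row operations, so that E · A = REF(A), and the rows of E that sit next to the zero rows of REF(A) lie in N(A^T). The matrix `A` below is a hypothetical 4 × 5 example of rank 3 (the article's matrix is only shown in a figure), and the function names are assumptions of the sketch.

```python
from fractions import Fraction

# Hypothetical 4 x 5 matrix of rank 3 (an assumption, not the article's figure).
A = [
    [1, 0, 3, 7, 0],
    [1, 1, 4, 10, 0],
    [0, 1, 1, 3, 1],
    [2, 2, 8, 20, 1],
]

def eliminate_with_record(A):
    """Forward-eliminate [A | I]; return (R, E, r) with E * A == R and r pivots."""
    m, n = len(A), len(A[0])
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(m)]
         for i, row in enumerate(A)]
    r = 0
    for c in range(n):  # pivots are searched only in the A block
        p = next((i for i in range(r, m) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, m):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == m:
            break
    return [row[:n] for row in M], [row[n:] for row in M], r

def matmul(X, Y):
    """Matrix product X * Y for lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

R, E, r = eliminate_with_record(A)
left_null_basis = E[r:]  # rows of E next to the zero rows of R
y = left_null_basis[0]

print(matmul(E, A) == R)                 # True
print([int(v) for v in y])               # [-1, -1, -1, 1]
print([int(v) for v in matmul([y], A)[0]])  # [0, 0, 0, 0, 0]
```

Here m − r = 4 − 3 = 1, so a single row of E spans the left-null space, matching the dimension count above.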

(6) General Picture of Subspaces

What is the general solution to Ax = b? Suppose xp is a particular solution (A·xp = b) and xn is any vector in the null space, that is, a linear combination of the special solutions. We can draw a graph as follows,

Then the general solution to Ax = b is,

with,

and,

In particular, if A is n × n with n pivots, then N(A) = {0} and Ax = b always has a unique solution.
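The structure x = xp + xn can be checked on a small example. Everything concrete below is an assumption of the sketch: `A` is a hypothetical 4 × 5 matrix whose null space is spanned by the two special solutions from the earlier example, and `b` and `x_p` were chosen by hand so that A·x_p = b.

```python
# Hypothetical data (assumptions, not taken from the article's figures).
A = [
    [1, 0, 3, 7, 0],
    [1, 1, 4, 10, 0],
    [0, 1, 1, 3, 1],
    [2, 2, 8, 20, 1],
]
b = [1, 2, 2, 5]
x_p = [1, 1, 0, 0, 1]        # particular solution with the free variables set to 0
s1 = [-3, -1, 1, 0, 0]       # special solutions spanning N(A)
s2 = [-7, -3, 0, 1, 0]

def matvec(M, x):
    """Matrix-vector product M x."""
    return [sum(a * v for a, v in zip(row, x)) for row in M]

def general_solution(c, d):
    """x = x_p + c*s1 + d*s2, where c*s1 + d*s2 is the null space part x_n."""
    return [p + c * u + d * v for p, u, v in zip(x_p, s1, s2)]

for c, d in [(0, 0), (2, -1), (-3, 5)]:
    x = general_solution(c, d)
    print(x, matvec(A, x) == b)  # second value is True for every (c, d)
```

Adding any null space vector leaves Ax unchanged, because A(xp + xn) = A·xp + A·xn = b + 0.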