Linear Algebra 17 | A Bite of Advanced Topics for Linear Algebra

Linear Algebra 17 | A Bite of Advanced Topics for Linear Algebra

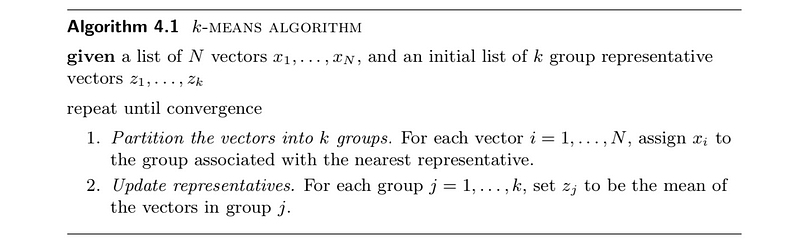

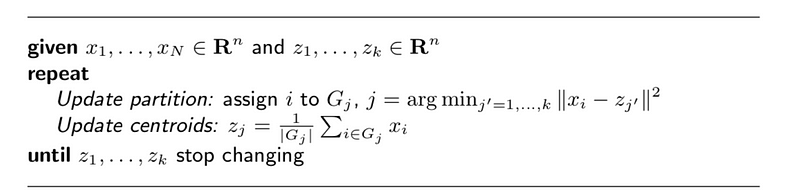

- k-Means

(1) Algorithm

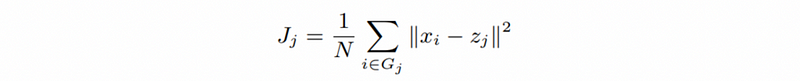

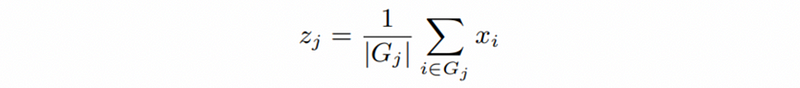

(2) Clustering Objective

(3) Mean Square Distance

(4) Centroid

(5) Pseudo Code

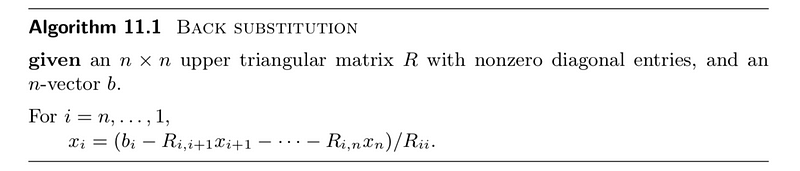

2. Back Substitution Algorithm

(1) Algorithm

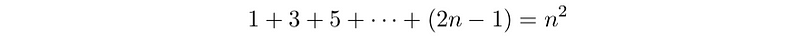

(2) Complexity

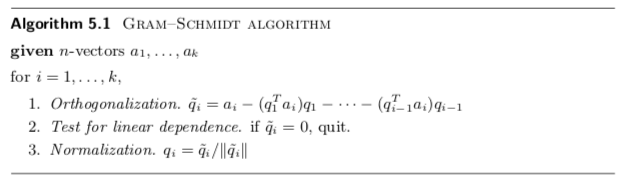

3. Gram-Schmidt Orthogonalization Algorithm

(1) Algorithm

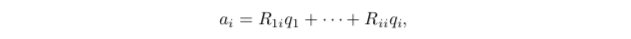

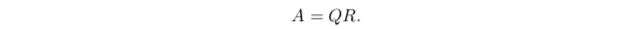

(2) QR Factorization

- Step 1.

- Step 2.

- Step 3.

(3) Complexity of the QR factorization

Suppose A is an m × n matrix, then there would be 2mn² flops.

Suppose A is an n × n matrix, then there would be 2n³ flops.

4. Inverse

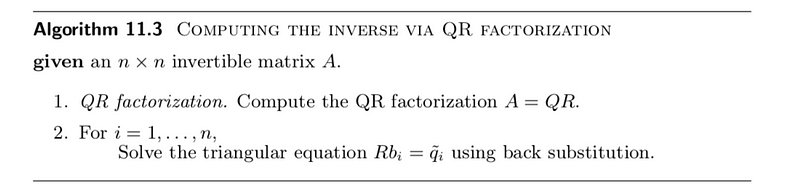

(1) Algorithm of Inverse via QR Factorization

(2) Complexity of Inverse via QR Factorization

Step 1. 2n³ flops

Step 2. n times n² flops = n³ flops

In total, with 3n³ flops

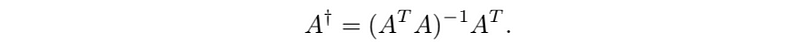

(3) Pseudo-inverse

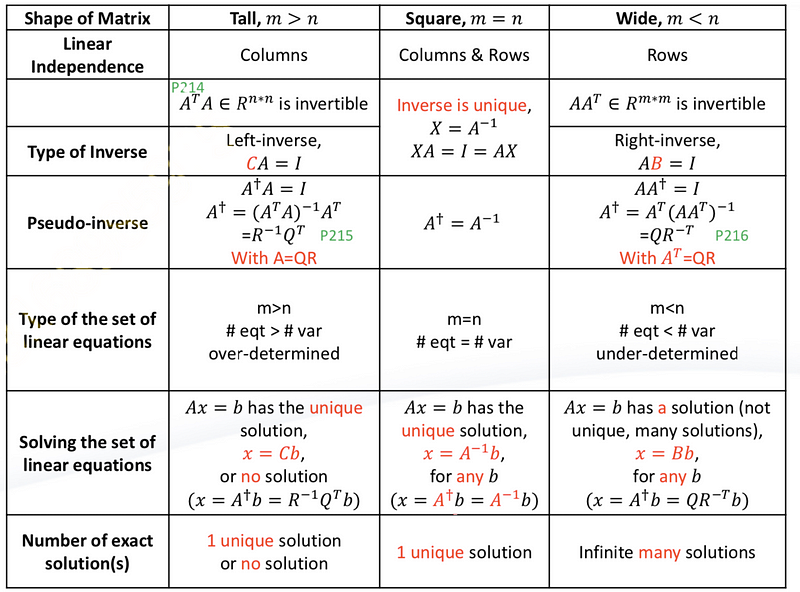

(4) Inverse Comparison of Matrics

5. Solving Linear Equations

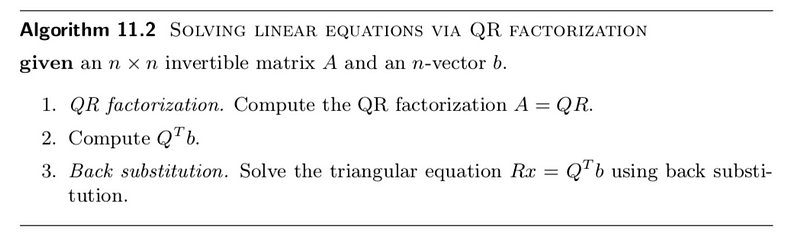

(1) Algorithm of Solving Linear Equations via QR Factorization

(2) Complexity of Solving Linear Equations via QR Factorization

Step 1. 2n³ flops,

Step 2. 2n² flops,

Step 3. n² flops,

In total,

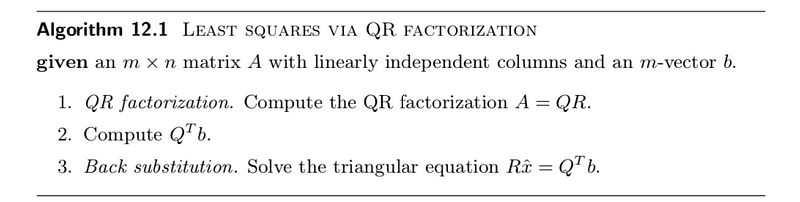

(3) Algorithm of Solving Least Square Problem via QR Factorization

Least square is a way to fit the parameters when there’s no solution for a linear equation system,

(4) Complexity of Solving Least Square Problem via QR Factorization

Step 1. 2mn² flops

Step 2. 2mn flops

Step 3. n² flops

In total, 2mn² flops

6. Least Square Problems

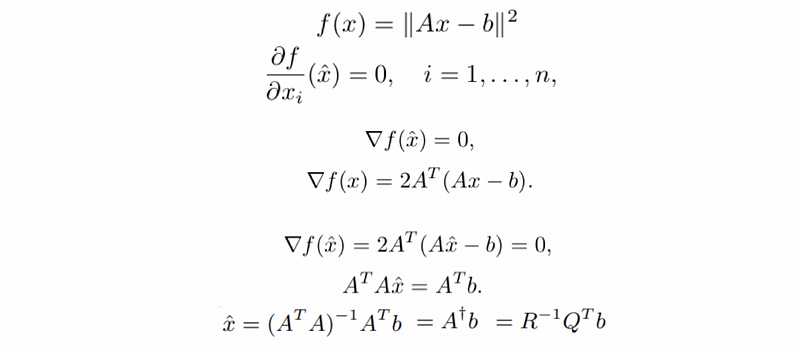

(1) Solving Least Square Problem via Calculus

(2) Least Square Data Fitting

Just now, we have used the least square to solve the linear equation system. Moreover, the least square can also be used to fit the data. This is also called the regression.

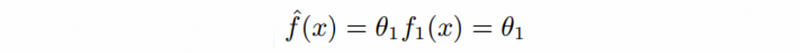

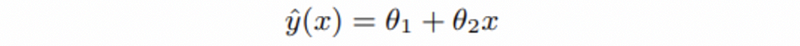

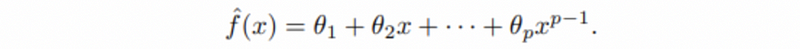

- constant model

- univariate model

- polynomial model

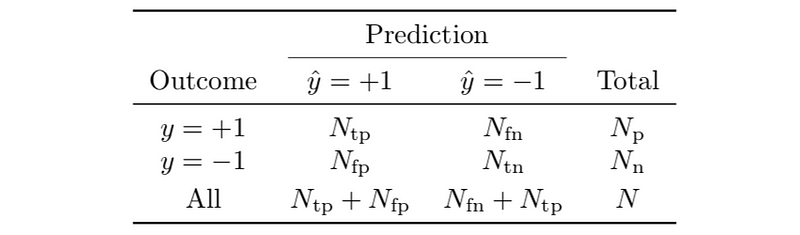

(3) Least Square Classification

- Error Rate

- True positive rate (also known as the sensitivity or recall rate)

- False positive rate (also known as the false alarm rate)

- Specificity or true negative rate

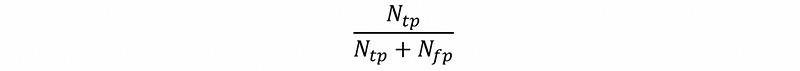

- Precision

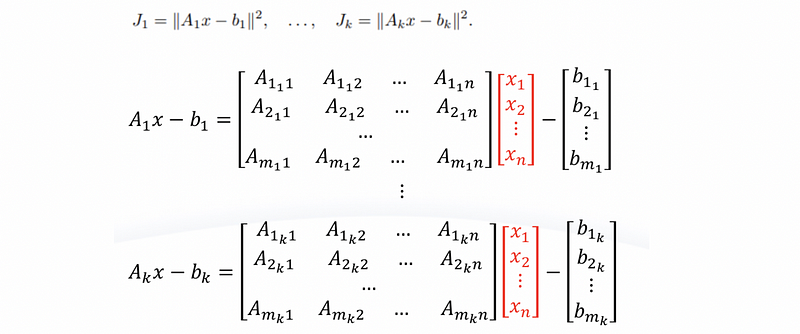

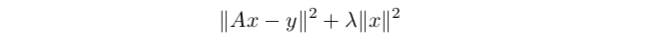

(4) Multi-objective least squares Problem

find a x-hat to make all the Ji’s small.

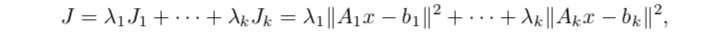

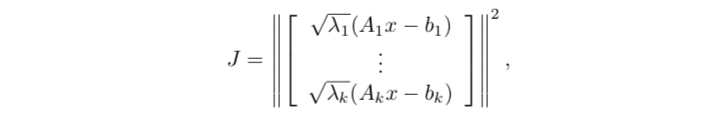

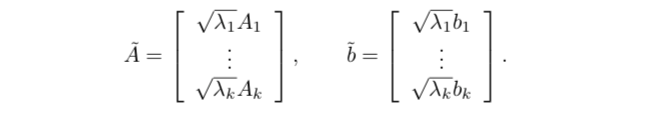

(5) Weighted Sum Solution for Multi-objective least squares Problem

The larger the weight of a term, the less we care about it.

Thus,

Then,

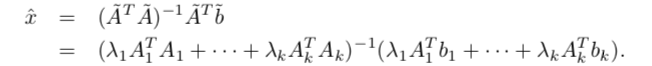

(6) Optimal Trade-off Curve

This is also called a bi-criterion problem. For a sum objective as,

Construct Pareto optimal,

Then draw the plot,

If J1 is too big, decrease weight λ. If J2 is too big, increase weight λ.

7. Regularization

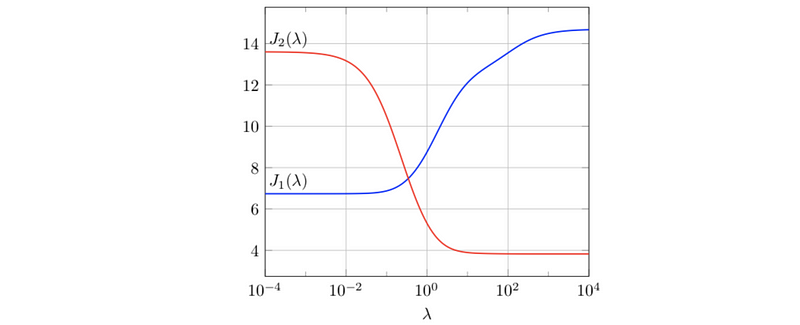

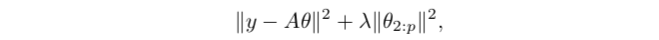

(1) Tikhonov Regularized inversion: to minimize,

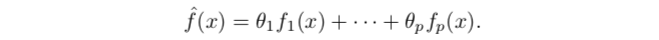

(2) Regulatrization: For model,

Note that the larger the parameters are, the more sensitive the corresponding f is.

then we should minimize,

Note that the smaller the parameters are, more less probability that there will be an overfitting problem.

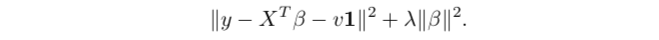

(3) Regression Regulatrization

8. Validation

(0) Sample Choose

train set (build model): test set (check model prediction) = 8:2 / 9:1

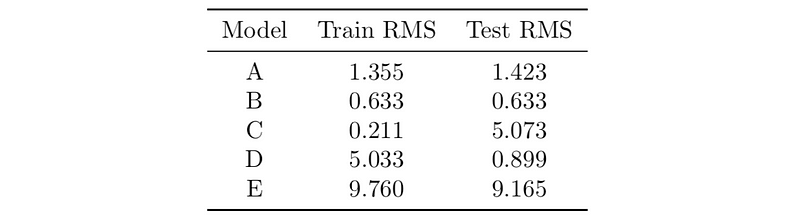

(1) Cross validation for the least square fit problem

- A model

This is a good model, and likely will generalise.

- B model

These results are suspicious, since it is unlikely that the train and test RMS errors would be so close. For example, maybe the model was accidentally tested on the training set. If the numbers are correct, then this is a very good model, and would likely generalize.

- C model

The model is over-fit.

- D model

Something is wrong, or you are lucky. Probably the former.

- E model

This is a bad model, but will likely generalise.

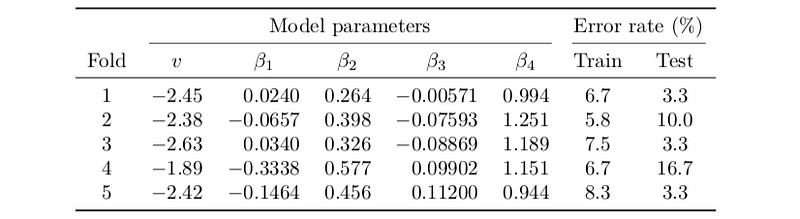

(2) Cross validation for the least square classification problem

- 1/3/5 model

These are good models, and likely will generalise. model #1 is the best.

- 2/4 model

The model is over-fit.

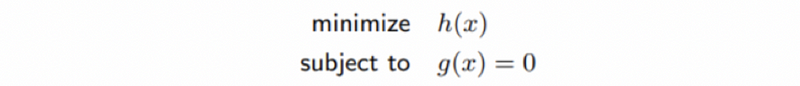

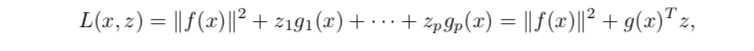

9. Constrained Least Squares

(1) Constrained Least Squares Problems

(2) Lagrange multipliers

(3) KKT conditions

(4) Solution by KKT conditions

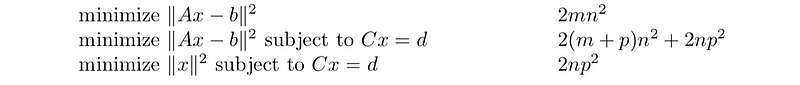

10. Complexity Cheatsheet

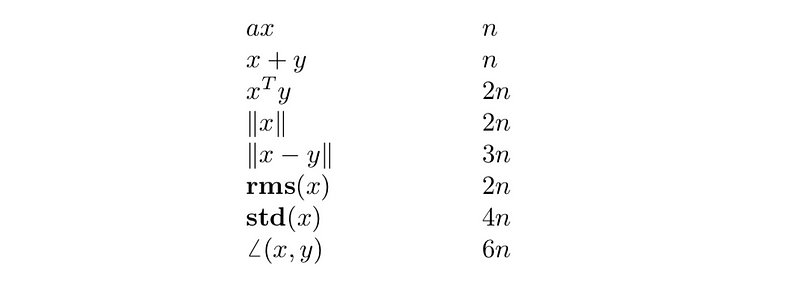

(1) Vector operations

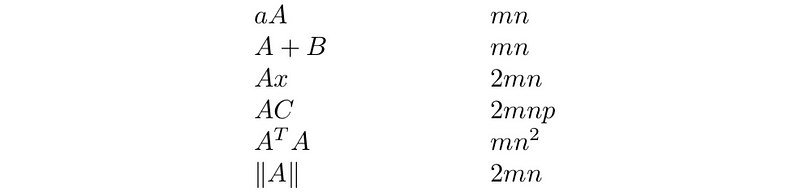

(2) Matrix operations

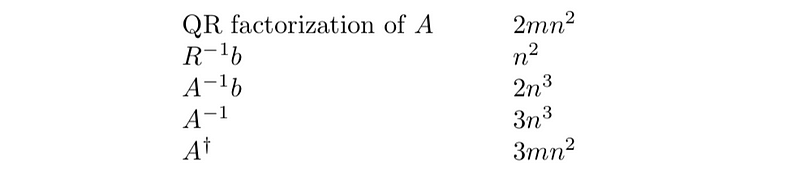

(3) Factorization and inverses

(4) Solving least squares problems