Operating System 1 | Introduction to Operating System, User/Kernel Protection Boundary, and Different Types of Operating Systems

1. Introduction to Operating System

(1) The Definition of Operating System

In simplest terms, an operating system is a piece of software that abstracts and arbitrates the underlying hardware. In this context, to abstract means to simplify what the hardware actually looks like, and to arbitrate means to manage and oversee control of the hardware.

Note that the cache memory is not managed by the operating system. Instead, it is managed by the hardware.

(2) Three Functions of Operating System

- Directs operational resources: controls the use of the CPU, memory, and peripheral devices, and allocates these resources among different applications.

- Enforces working policies: ensures fair access to resources and imposes limits on the maximum amount of a given resource that a particular application or process can use. Examples include the maximum number of files that can be open per process, or the thresholds that must be crossed before certain memory-management daemons kick in.

- Mitigates the difficulty of complex tasks: simplifies and abstracts the view of the underlying hardware that is observed by the applications running on that platform. In practice, applications make system calls, and the OS takes care of carrying out the requested operations on the hardware properly.
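The per-process limit on open files is one policy that can be observed from user space. The following is a minimal Python sketch, assuming a Unix-like OS (the standard resource module is not available on Windows); the function name and the limit of 32 are illustrative choices, not part of any OS interface. It temporarily lowers the process's soft limit, opens files until the kernel refuses, and then restores the original limit.

```python
import errno
import os
import resource


def demonstrate_open_file_limit(new_soft_limit: int = 32):
    """Lower this process's open-file limit, hit it, then restore it.

    Returns (number of extra files opened, errno of the failing open()).
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # Policy: ask the kernel to enforce a smaller per-process limit.
    resource.setrlimit(resource.RLIMIT_NOFILE, (new_soft_limit, hard))
    fds = []
    failure = None
    try:
        while True:
            # Each successful open() consumes one file descriptor.
            fds.append(os.open(os.devnull, os.O_RDONLY))
    except OSError as exc:
        # The kernel refuses once the limit is reached (EMFILE).
        failure = exc.errno
    finally:
        for fd in fds:
            os.close(fd)
        # Restore the original limits so the rest of the program is unaffected.
        resource.setrlimit(resource.RLIMIT_NOFILE, (soft, hard))
    return len(fds), failure


if __name__ == "__main__":
    opened, err = demonstrate_open_file_limit()
    print(f"opened {opened} files before failing with {errno.errorcode[err]}")
```

The count is usually a little below the limit, because descriptors the process already holds (standard input, output, error, and so on) count toward it too.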

(3) Goals for Operating Systems

- Privileged Access: the operating system is a layer of system software that has direct, privileged access to the underlying hardware.

- Hide hardware complexity: the operating system hides the complexity of the underlying hardware from applications and developers. For example, we don’t have to worry about how memory or disk storage is managed when we use an application. The OS also hides the differences between storage devices: hard disks, SSDs, and USB flash drives are all abstracted as files that we can read from and write to. Similarly, when a web server application responds to requests, we don’t want to think about the bits and packets that must be composed to send a response. The operating system abstracts networking resources as sockets, through which we can send and receive data.

- Resource Management: the OS decides how many resources each application gets and which ones it uses. For instance, the operating system allocates memory to applications and schedules them onto the CPU.

- Provide Isolation & Protection: the operating system must ensure that each application can make adequate progress and that applications do not harm one another. For instance, it allocates different parts of physical memory to different applications and ensures that no application can access another application’s memory.
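To see the file and socket abstractions from an application's point of view, here is a minimal Python sketch (the function names are hypothetical). The program only deals with a file path and a TCP socket on the loopback interface; the OS decides which storage device and driver handle the bytes, and its network stack builds the packets.

```python
import os
import socket
import tempfile


def file_roundtrip(data: bytes) -> bytes:
    """Write bytes to a file and read them back through the file abstraction."""
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "example.bin")
        with open(path, "wb") as f:  # no knowledge of disk blocks or drivers needed
            f.write(data)
        with open(path, "rb") as f:
            return f.read()


def socket_roundtrip(data: bytes) -> bytes:
    """Send bytes over a loopback TCP connection through the socket abstraction."""
    with socket.create_server(("127.0.0.1", 0)) as server:  # port 0: the OS picks a free port
        port = server.getsockname()[1]
        with socket.create_connection(("127.0.0.1", port)) as client:
            conn, _ = server.accept()
            with conn:
                client.sendall(data)  # the kernel turns this into TCP segments and packets
                received = b""
                while len(received) < len(data):
                    chunk = conn.recv(len(data) - len(received))
                    if not chunk:  # peer closed the connection early
                        break
                    received += chunk
                return received


if __name__ == "__main__":
    print(file_roundtrip(b"stored as a file"))
    print(socket_roundtrip(b"sent through a socket"))
```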

(4) Operating System Examples

Now, let’s look at some examples of operating systems. We focus on operating systems for desktops and for embedded and mobile systems, because they are the most common today:

- Microsoft Windows

- Unix-Based: Mac OS X (BSD), Linux, …

- Android

- iOS

- Symbian

- …

(5) Operating System Elements

To achieve its goals, the operating system supports a number of higher-level abstractions and a number of key mechanisms that operate on top of these abstractions. The abstractions include,

- Process

- Thread

- File

- Socket

- Memory page

The mechanisms include,

- Create

- Schedule

- Open

- Write

- Allocate

Operating systems may also integrate specific policies that determine exactly how these mechanisms are used to manage the underlying hardware. For instance, a policy can set the maximum number of sockets that a process can have access to. Policies may also decide which data will be evicted from physical memory, based on an algorithm such as least recently used (LRU), or which task runs next on the CPU, based on an algorithm such as earliest deadline first (EDF).
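Several of these abstractions and mechanisms can be exercised directly from a program. The minimal Python sketch below (function names are hypothetical) asks the OS to allocate one memory page with an anonymous mmap and creates a thread that writes into it; the program never chooses which CPU runs the thread, because scheduling is left to the OS.

```python
import mmap
import threading


def allocate_page() -> mmap.mmap:
    """Allocate: ask the OS for one anonymous, zero-filled memory page."""
    return mmap.mmap(-1, mmap.PAGESIZE)


def write_from_new_thread(page: mmap.mmap, message: bytes) -> None:
    """Create a thread that writes into the page; the OS schedules it on a CPU."""

    def worker() -> None:
        page[: len(message)] = message

    thread = threading.Thread(target=worker)
    thread.start()  # create the thread and make it runnable
    thread.join()   # wait until the scheduler has run it to completion


if __name__ == "__main__":
    page = allocate_page()
    write_from_new_thread(page, b"hello")
    print(mmap.PAGESIZE, page[:5])
    page.close()
```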

(6) Principles for Designing Operating System

Principle #1: The separation between mechanisms and policies

This means that we want to build into the operating system flexible mechanisms that can support a range of policies. For memory management, useful policies include LRU (least recently used), LFU (least frequently used), or completely random replacement. To support all of them, the OS needs mechanisms that track how often, and when, memory locations have been accessed.
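Principle #1 can be sketched in Python as one mechanism shared by several interchangeable policies. This is a simplified model under stated assumptions: pages are plain labels, and a logical counter stands in for the access information that real hardware and OS code would record (for example, via page-table accessed bits); all names are hypothetical.

```python
import random


class AccessTracker:
    """Mechanism: records when and how often each page is accessed."""

    def __init__(self) -> None:
        self.clock = 0        # logical time, incremented on every access
        self.last_used = {}   # page -> logical time of most recent access
        self.use_count = {}   # page -> total number of accesses

    def touch(self, page: str) -> None:
        self.clock += 1
        self.last_used[page] = self.clock
        self.use_count[page] = self.use_count.get(page, 0) + 1


# Policies: each chooses a victim page using only what the mechanism recorded.
def lru(tracker: AccessTracker) -> str:
    return min(tracker.last_used, key=tracker.last_used.get)


def lfu(tracker: AccessTracker) -> str:
    return min(tracker.use_count, key=tracker.use_count.get)


def random_choice(tracker: AccessTracker) -> str:
    return random.choice(list(tracker.last_used))


def evict(tracker: AccessTracker, policy) -> str:
    """Evict the page selected by the given policy and stop tracking it."""
    victim = policy(tracker)
    del tracker.last_used[victim]
    del tracker.use_count[victim]
    return victim


if __name__ == "__main__":
    tracker = AccessTracker()
    for page in ["A", "A", "A", "B", "C", "B"]:
        tracker.touch(page)
    print("LRU would evict", lru(tracker))  # A: not touched since time 3
    print("LFU would evict", lfu(tracker))  # C: touched only once
```

Switching from LRU to LFU only means passing a different policy function to evict; the tracking mechanism stays the same, which is the point of separating the two.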

Principle #2: Optimizing for the common case

Optimizing for the common case means that we need to answer questions such as:

- How will the operating system be used?

- What will it need to provide, and what is the common case?

- Where will the operating system be used?

- What kind of machine will it run on?

- How many processing elements does the machine have?

- How many CPUs?

- What kinds of devices?

- How much memory?

- What commands will the end user run on that machine?

- What applications will be executed on the machine?

- What are the requirements of that workload?

- How does that workload behave?

- etc.

(7) Operating System Services

The operating system provides many services that can be used to interact with the hardware,

- Scheduler: for controlling the access to the CPU

- Memory Manager: for allocating the underlying physical memory

- Block Device Driver: for a block device like a disk

There are also some higher-level services that are linked with higher-level abstractions. For instance,

- File System

Other services provide applications and developers with a number of useful types of functionality, including:

- Process Management

- File Management

- Device Management

Applications access these services through system calls.

2. User/Kernel Protection Boundary

(1) User Mode and Kernel Mode

As noted earlier, the operating system must have privileged, direct access to the hardware. To support this, computer hardware distinguishes between two modes: the privileged one is called kernel mode, and the unprivileged one is called user mode.

(2) User-kernel Switch

To have direct access to the hardware, the operating system must run in privileged kernel mode. Applications, by contrast, run in user mode so that they remain isolated from the hardware and from one another. Most modern computers provide hardware support for switching between user mode and kernel mode.

(3) User-kernel Switch Example: Instruction Trap

A specific example of the user-kernel switch is the instruction trap (we will talk more about it later). When code running in user mode attempts to perform a privileged operation, the hardware typically raises a trap. A trap, also known as an exception or a fault, is a type of synchronous interrupt caused by an exceptional condition. The application is interrupted, and the hardware transfers control to the operating system at a specific location. The operating system then checks what caused the trap and decides whether to allow the access or to terminate the process to prevent an illegal operation.
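An illegal memory access is an easy trap to trigger from user space. The minimal Python sketch below, assuming a POSIX system, runs a child process that reads address 0 through ctypes.string_at. That address is not mapped, so the hardware raises a fault, control passes to the kernel, and the kernel terminates the child with a signal (typically SIGSEGV on Linux). Note that this demonstrates an illegal memory access rather than a privileged instruction, and the helper name is hypothetical.

```python
import signal
import subprocess
import sys

# Child program: read a C string at address 0. Address 0 is not mapped in the
# child's address space, so the CPU raises a fault and traps into the kernel.
BAD_ACCESS = "import ctypes; ctypes.string_at(0)"


def run_faulting_child() -> int:
    """Run the child in a separate process and return its exit status.

    On POSIX, subprocess reports a negative return code -N when the child
    was terminated by signal N.
    """
    result = subprocess.run([sys.executable, "-c", BAD_ACCESS], capture_output=True)
    return result.returncode


if __name__ == "__main__":
    code = run_faulting_child()
    if code < 0:
        print("kernel terminated the child with", signal.Signals(-code).name)
    else:
        print("child exited normally with status", code)
```

Running the faulting code in a child process keeps the demonstration safe: only the child is terminated, while the parent observes the outcome.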

(4) User-kernel Switch Example: System Call Interface

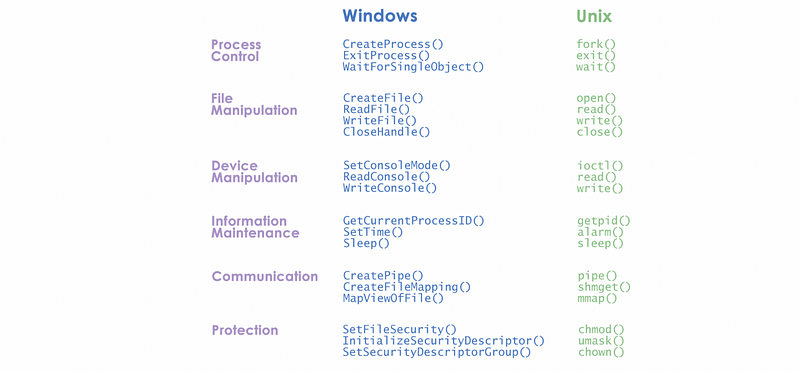

In addition to traps, applications interact with the operating system through the system call interface. The operating system exports this interface so that applications can access the hardware through the operating system. Common system calls include open for files, send for sockets, and mmap for memory. The image above summarizes the most common system calls for Windows and UNIX.

To understand how a system call is carried out, consider this simplified workflow:

- Step 1: The process starts in user mode (mode bit = 1). When the application needs hardware access, it makes a system call.

- Step 2: Execution switches to privileged kernel mode (mode bit = 0), where the operating system performs the operation. This switch involves changing the execution context from user to kernel and passing whatever arguments the operating system needs.

- Step 3: When the system call completes, the operating system returns the result and control to the calling process. This again involves changing the execution context from kernel mode (mode bit = 0) back to user mode (mode bit = 1), passing any results back into user space, and jumping back to the point in the program where the system call was made.

Note that this workflow is synchronous: the user process waits until the system call completes. We will talk about asynchronous alternatives later.
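The workflow above can be observed with Python's os functions, which on Unix-like systems are thin wrappers around the corresponding system calls. This is a minimal sketch, assuming a Unix-like OS; the function and file names are hypothetical.

```python
import os
import tempfile


def syscall_roundtrip(data: bytes) -> bytes:
    """Write bytes to a file and read them back using thin syscall wrappers.

    On a Unix-like system each os.* call below normally results in one
    system call: the process switches to kernel mode, the kernel performs
    the operation, and control returns here with the result. The process
    waits (blocks) until each call completes.
    """
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "demo.bin")
        fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)  # open(2)
        try:
            written = os.write(fd, data)   # write(2): returns bytes written
            os.lseek(fd, 0, os.SEEK_SET)   # lseek(2): rewind to the start
            return os.read(fd, written)    # read(2): returns the bytes read
        finally:
            os.close(fd)                   # close(2)


if __name__ == "__main__":
    print(syscall_roundtrip(b"hello kernel"))
```

On Linux, running such a script under strace (for example, strace -e trace=openat,write,lseek,read,close python3 demo.py) should show these calls among the interpreter's own; modern C libraries typically issue openat rather than open.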

(5) User-kernel Switch Example: Signals

An operating system also supports signals, which are a mechanism for the operating system to send notifications to applications. We will talk about them later.
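As a small preview, the minimal Python sketch below (assuming a POSIX system and that it runs in the main thread, which Python requires for installing handlers) registers a handler for SIGUSR1 and then uses the kill system call to ask the kernel to deliver that signal to the same process. The function name and the one-second timeout are illustrative choices.

```python
import os
import signal
import time


def notify_self(signum: int = signal.SIGUSR1, timeout: float = 1.0) -> list:
    """Send a signal to this process and return the signals the handler saw."""
    received = []

    def handler(sig, frame):
        # Runs in user space after the kernel delivers the notification.
        received.append(sig)

    previous = signal.signal(signum, handler)  # register the handler
    try:
        os.kill(os.getpid(), signum)  # kill(2): ask the kernel to deliver it
        # Python runs handlers between bytecode instructions, so give it a moment.
        deadline = time.monotonic() + timeout
        while not received and time.monotonic() < deadline:
            time.sleep(0.001)
    finally:
        signal.signal(signum, previous)  # restore the earlier disposition
    return received


if __name__ == "__main__":
    print(notify_self())
```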

(6) Cost of User-Kernel Transitions

From the system call workflow above, we can see that user-kernel transitions are a necessary part of application execution, because applications need access to certain types of hardware. However, these transitions are not cheap.

First, the hardware must support these transitions. We have already seen that the hardware traps on illegal instructions or memory accesses.

Second, performing a trap requires executing a number of instructions, and it takes time for the machine to perform all these operations.

Third, these transitions can affect the hardware cache. Because a context switch can replace the data/addresses currently in the cache, an application's performance can benefit or suffer depending on what the cache contains when the application accesses it. A cache is considered hot if it contains the data/addresses the application needs when the application accesses it. Likewise, a cache is considered cold if it does not contain the data/addresses the application needs, forcing the application to retrieve them from main memory.
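A rough way to see the direct cost is to compare a plain function call with a call that enters the kernel. The minimal Python sketch below uses os.getppid, which performs the getppid system call; the helper names are hypothetical. The numbers depend heavily on the machine, the kernel, and the interpreter's own overhead (which is included in both measurements), so only the relative difference is of interest, and the sketch says nothing about the cache effects described above.

```python
import os
import time


def plain_function() -> int:
    """Stays entirely in user mode."""
    return 42


def time_per_call(fn, n: int = 200_000) -> float:
    """Average wall-clock seconds per call of fn over n calls."""
    start = time.perf_counter()
    for _ in range(n):
        fn()
    return (time.perf_counter() - start) / n


if __name__ == "__main__":
    user_only = time_per_call(plain_function)
    with_syscall = time_per_call(os.getppid)  # getppid(2): crosses into the kernel
    print(f"plain call: {user_only * 1e9:.0f} ns per call")
    print(f"getppid():  {with_syscall * 1e9:.0f} ns per call")
```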

(7) System Call Examples

Let’s now look at some examples of system calls for a 64-bit Unix machine. You can find the full list of system calls on this site and use it to answer the following questions.

Which system call is used to send a signal to a process?

kill

Which system call is used to set the group identity of a process?

setgid

Which system call is used to mount a file system?

mount

Which system call is used to read/write system parameters?

sysctl

3. Different Types of Operating Systems

(1) Monolithic OS

A monolithic operating system is one in which file management, memory management, device management, and process management are all controlled directly within the kernel. Historically, operating systems have had a monolithic design. For instance, a monolithic operating system may include several file systems, one specialized for sequential workloads and another for random I/O (common with databases).

So the main point of the monolithic OS is that everything is included in the kernel and packaged together. However, a monolithic OS has a great deal of state and a large codebase, which makes it hard to maintain and debug.

(2) Modular OS

A more common approach today is the modular OS, such as Linux. This kind of operating system already includes some basic services and APIs, and, more importantly, everything else can be added as a module that implements an interface specified by the OS.

Depending on the workload, we can install a module that implements the required interface in a way that makes sense for that workload. The advantages of a modular OS are that it is easier to maintain and upgrade, and it has a smaller, less resource-intensive codebase, which leaves more memory for applications. However, because requests must pass through the module interfaces, this indirection can reduce some opportunities for optimization (usually not significantly). Also, modules can come from many independent developers and may contain bugs, so maintaining them is still a big issue.
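The idea of a fixed interface with interchangeable modules can be sketched as a toy model in Python. Everything here is hypothetical and only illustrates the structure (real Linux kernel modules are compiled C code loaded with tools such as insmod or modprobe): a tiny "kernel" accepts any module that implements a FileSystemModule interface, and two modules implement it differently, one keeping files in a dictionary and one appending every write to a log, as a design suited to sequential writes might.

```python
from abc import ABC, abstractmethod


class FileSystemModule(ABC):
    """Hypothetical interface that every file-system module must implement."""

    @abstractmethod
    def read(self, path: str) -> bytes: ...

    @abstractmethod
    def write(self, path: str, data: bytes) -> None: ...


class InMemoryFS(FileSystemModule):
    """Random-access friendly: each path maps directly to its contents."""

    def __init__(self) -> None:
        self.files = {}

    def read(self, path: str) -> bytes:
        return self.files[path]

    def write(self, path: str, data: bytes) -> None:
        self.files[path] = bytes(data)


class AppendOnlyFS(FileSystemModule):
    """Sequential-write friendly: every write is appended to one log."""

    def __init__(self) -> None:
        self.log = []  # (path, data) records in write order

    def write(self, path: str, data: bytes) -> None:
        self.log.append((path, bytes(data)))

    def read(self, path: str) -> bytes:
        for logged_path, data in reversed(self.log):  # newest record wins
            if logged_path == path:
                return data
        raise FileNotFoundError(path)


class Kernel:
    """Toy kernel core: loads modules at run time behind a fixed interface."""

    def __init__(self) -> None:
        self.modules = {}

    def load_module(self, name: str, module: FileSystemModule) -> None:
        if not isinstance(module, FileSystemModule):
            raise TypeError("module does not implement FileSystemModule")
        self.modules[name] = module

    def sys_write(self, fs: str, path: str, data: bytes) -> None:
        self.modules[fs].write(path, data)  # indirection through the interface

    def sys_read(self, fs: str, path: str) -> bytes:
        return self.modules[fs].read(path)
```

The call through self.modules[fs] is the indirection mentioned above: the kernel core never knows how a module stores data, which keeps modules swappable but hides module internals from cross-module optimizations.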

(3) Microkernel

A microkernel provides only the most basic primitives at the operating system level, for instance, support for representing an executing application (its address space and threads of execution). All other components, including applications such as databases as well as software that we typically think of as part of the operating system, such as file systems and disk drivers, run outside the kernel at user level.

The benefit of a microkernel is that it is very small and easier to verify, which makes it suitable for some embedded systems and certain kinds of control systems. The downside of the microkernel design is that, although it is small, its portability is questionable, because it is typically highly customized to the underlying hardware, and this also leads to greater software complexity.
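The toy model below (Python, with entirely hypothetical names) shows the structural idea. The "kernel" object offers nothing but message passing between named endpoints, which is how microkernels typically let user-level components communicate (inter-process communication), while a file-system server runs as an ordinary user-level thread. It is an illustration only; a real microkernel implements these primitives in privileged mode.

```python
import queue
import threading


class Microkernel:
    """Toy microkernel: its only service is message passing between endpoints."""

    def __init__(self) -> None:
        self.endpoints = {}

    def create_endpoint(self, name: str) -> None:
        self.endpoints[name] = queue.Queue()

    def send(self, dest: str, message) -> None:
        self.endpoints[dest].put(message)

    def receive(self, name: str):
        return self.endpoints[name].get()  # blocks until a message arrives


def file_server(kernel: Microkernel) -> None:
    """User-level file-system server: runs outside the kernel."""
    files = {"/hello.txt": b"hello from user space"}
    while True:
        request = kernel.receive("fs")
        if request is None:  # shutdown request
            return
        reply_to, path = request
        kernel.send(reply_to, files.get(path))  # None if the file does not exist


def client_read(kernel: Microkernel, path: str):
    """Application side: a file read becomes a request and a reply message."""
    kernel.send("fs", ("client", path))
    return kernel.receive("client")


if __name__ == "__main__":
    kernel = Microkernel()
    kernel.create_endpoint("fs")
    kernel.create_endpoint("client")
    server = threading.Thread(target=file_server, args=(kernel,), daemon=True)
    server.start()
    print(client_read(kernel, "/hello.txt"))
    kernel.send("fs", None)
    server.join()
```

Every file read here costs a request message and a reply message. In a real microkernel each message involves crossing the user/kernel boundary, which is one reason this design can be slower than a monolithic kernel.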