Operating System 6 | Thread Part 2, Reader-Writer Problem, Spurious Wakeups, and Deadlocks

Operating System 6 | Thread Part 2, Reader-Writer Problem, Spurious Wakeups, and Deadlocks

- Mutex And Condition Variable

(1) Reader/Writer Problem

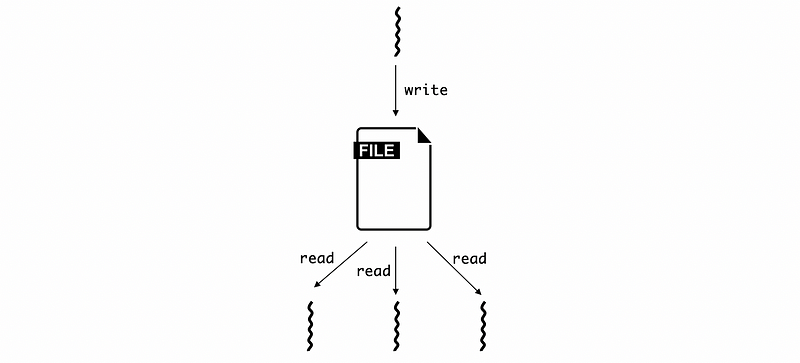

Reader/writer problems can be common in a multi-threading system as a combination of mutex and condition variables. Some of the threads operations try to access the shared state for reading, while other threads would like to perform write operations to that same shared state. Suppose we have 0 or more threads read from a file and 0 or 1 thread for writing to it.

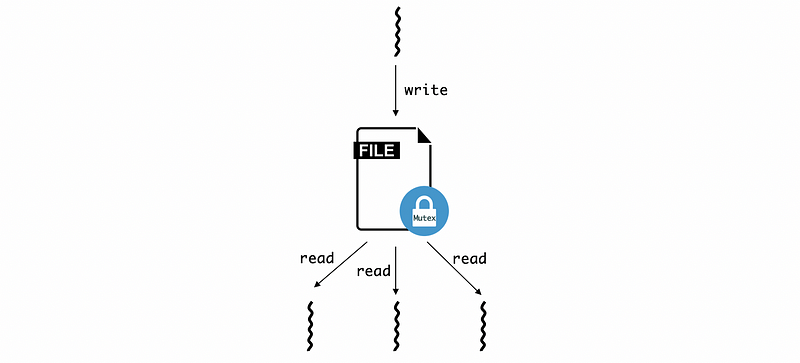

To avoid concurrency problems, we may want to lock the access to the file by a mutex, so that only one thread can have access to this file at a time.

However, this mutex lock can be too restrictive for a reader/writer problem. Even though the writing thread is precisely restricted to a binary state (1 thread or 0), the reading threads are also restricted to 1 thread at a time. But we are not allowed to have multiple reading threads at a time, which should be allowed.

(2) Solution for Reader/Writer Problem

The solution for this r/w problem is that we can add thread counters to count the number of the reading threads and the writing threads. For instance, suppose we have the counter read_counter for counting the reading threads and write_counter for counting the writing threads. Then,

if (read_counter == 0 ANDwrite_counter == 0) {R ok; W ok;}

if (read_counter > 0) {R ok; W no;}

if (write_counter == 1) {R no; W no;}

In reality, we are going to construct a counter for our resource and there will be three states for this counter, free, reading, writing.

// free state

resource_counter == 0;

// reading state

resource_counter > 0;

// writing state

resource_counter == -1;

This counter is also called a predicator or a proxy variable, which can be used to show the states of the threads.

(3) Reader/Writer Example

Now that we have constructed our condition variable for this problem, it is time to implement the readers and the writer. For readers, we will first lock the resource and see whether we can access the file by checking the condition variable resource_counter. If the resource_counter is set to be -1, which means we can only write to the source by the writing thread, then this reading thread will call Wait to wait for a signal read_phase.

Condition read_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter == -1) Wait(m, read_phase);

}

Once we get the signal of read_phase, the current reading thread can continue and then read the resource.

Condition read_phase, write_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter == -1) Wait(m, read_phase);

resource.read();

if(resource_counter == 0) Signal(write_phase);

}

However, this can have a problem. Suppose we have two reading threads and all of them receive a read_phase signal from the writer (by Broadcast). Then they are all going to read from the resource. Suppose one thread finishes the reading first and the other finishes the reading later, then two write_phase signals will be sent in turn. When the writer receives the signal write_phase from the first reading thread, the second one is still reading. This is where we will have a concurrency problem.

So what we can do is that we can separate actually access to the file from the control blocks. Thus, if there are more than 1 reading threads executing, we will not send the write_phase signal. This can be implemented by,

Condition read_phase, write_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter == -1) Wait(m, read_phase);

resource_counter++;

}

// access resource for reading

resource.read(); // we don't use a mutex here for multi threads

lock(m) {

resource_counter--;

if(resource_counter == 0) Signal(write_phase);

}

For the writer, this can be quite similar. We have to wait for the signal write_phase when the counter is not 0 and we have to set the counter to -1 before we write to the resource.

Condition read_phase, write_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter != 0) Wait(m, write_phase);

resource_counter = -1;

}

Then we can write to the resource. After the writing thread writes to the resource, it updates the resource counter to 0 so that the resource is now free. And finally, it will broadcast to all reading threads the read_phase signal and then we will signal to the write_phase.

Condition read_phase, write_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter != 0) Wait(m, write_phase);

resource_counter = -1;

}

// access resource for writing

resource.write(...)

lock(m) {

resource_counter = 0;

Broadcast(read_phase);

Signal(write_phase);

}

(4) Critical Section

In general, for the previous case, the reader threads should be,

Condition read_phase, write_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter == -1) Wait(m, read_phase);

resource_counter++;

}

// access resource for reading

resource.read();

lock(m) {

resource_counter--;

if(resource_counter == 0) Signal(write_phase);

}

And the writer threads should be,

Condition read_phase, write_phase;

int resource_counter = 0;

Mutex m;

lock(m) {

while(resource_counter != 0) Wait(m, write_phase);

resource_counter = -1;

}

// access resource for writing

resource.write(...)

lock(m) {

resource_counter = 0;

Broadcast(read_phase);

Signal(write_phase);

}

So what are the critical section for the readers and the writer? We have known that the critical section is a code segment where the shared variables can be accessed and the shared resource can be accessed only when we read or write to the shared resource. Therefore, the critical section in the case above is the bolded content of resource.read(); and resource.write();.

In general, the code segment before the critical section is called the enter critical section and it is sort of like a lock operation that we have to perform before accessing a resource. The code segment after the critical section is called the exit critical section and it is also sort of like an unlock operation that we have to perform after accessing a resource.

The enter critical section has the structure of,

// ENTER CRITICAL SECTION

Lock(Mutex) {

while(predicator != access_ok) Wait(Mutex, Condition);

predicator = new_state;

}

The exit critical section has the structure of,

// EXIT CRITICAL SECTION

Lock(Mutex) {

predicator = updated_state;

Broadcast/Signal(Condition_Wait_Threads);

}

And the main code segment should be,

// ENTER CRITICAL SECTION

read/write(shared_file); // CRITICAL SECTION

// EXIT CRITICAL SECTION

(5) Tips for Avoiding Common Mistakes of Mutex and Condition Variables

- Keep track of every mutex or condition variables used with a resource. For example,

Mutex m1; // mutex for file 1

- Check that you are always using lock and unlock. Don’t eat one of them. The compiler will always generate some warnings of the half-locked resource.

- Use a single mutex for a single resource. For instance, if we use both

Mutex m1;andMutex m2;for the access of the same file. If two threads lock this resource bym1andm2, respectively, then these 2 threads could run concurrently, and these mutexesm1andm2are useless. - Check that you are signaling the correct condition. Using comments when you signal or broadcast any conditions.

- Check that you are not using a

signalwhen abroadcastis needed. If you are doing so, it is potential that you are going to have deadlocks. However, when you need asignalbut instead, you use abroadcast, this can be safe (if you don’t care about the performance). So when you are not sure about which one to use, always use thebroadcast.

2. Spurious Wakeups and Deadlocks

(1) The Definition of Spurious Wakeups

The spurious wakeups happen when we wake the threads up (by broadcast or signal) knowing that they may not be able to proceed. For example, suppose we have the following writer,

Mutex m;

lock(m) {

resource_counter = 0;

Broadcast(read_phase);

// some other things here

}

And we also have the following reader,

Wait(m, read_phase);

We have known that when the reader receives the read_phase signal, it will be moved from the waiting queue and starts to re-acquire the mutex m. However, in this case, because the writer is not finished (it may have some other operations to execute) and the mutex m is not released by the writer. So even though the reader threads are woken up by the broadcast, they still have to wait for the mutex to release. This is called spurious wakeups or unnecessary wakeups.

However, the spurious wakeups will not impact the correctness of our program. Instead, it will impact the overall performance by wasted cycles for context switching. The spurious wakeups can happen only when we unlock the mutex after the broadcast or signal operations, which means that this pitfall can be eliminated if we first release the mutex (unlock) and then call the broadcast or signal. For instance, we can simply change the program in the previous case to

Mutex m;

lock(m) {

resource_counter = 0;

} // unlock

Broadcast(read_phase);

// some other things here

and then there will be no spurious wakeups.

(2) Spurious Wakeups Exceptions

However, there’s also a situation that we can not eliminate the spurious wakeup problems. Let’s see that if we have a broad or a signal call rely on a specific condition of the predicator, we can not release the mutex m because then the value of the predicator can be changed by some other threads. For example,

Mutex m;

lock(m) {

resource_counter--;

if(resource_counter == 0) signal(write_phase);

} // unlock

This would not be possible if we move the if(resource_counter == 0) signal(write_phase); out of the lock and release the mutex before we run this operation.

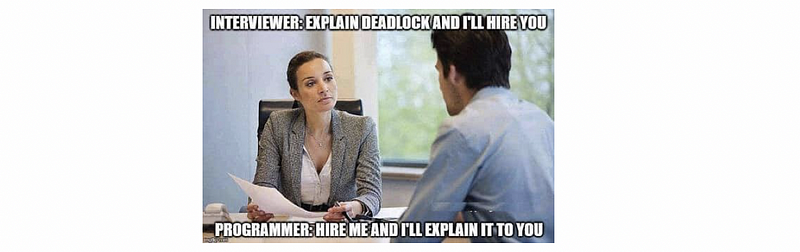

(3) The Definition of Deadlocks

One of the scariest problems related to multi-threading is the deadlock. The deadlocks happen when two or more competing threads are waiting on each other to complete, but none of them ever do. So the execution of the process overall of all these threads is stuck and it can’t continue.

Let’s see a joke about the deadlock. In this joke, the interviewer is waiting for the interviewee to explain the deadlock first, while the interviewee is waiting for the interviewer to hire him/her first. So both of them are waiting for each other to respond and none of them actually do anything. This analogy perfectly describes the situation of a deadlock.

(4) Deadlock: An Example

Let’s now see an example of the deadlock. Suppose we have got two threads and both of these two threads call a function foo that requires resource A and resource B. In order to call this function, thread 1 first lock the resource A , and then the resource B. However, thread 2 first lock the resource B , and then the resource A. So this will be,

/* T1 */ /* T2 */

// do something // do something

lock(mutex_A) { lock(mutex_B) {

// do something // do something

lock(mutex_B) { lock(mutex_A) {

// do something // do something

foo(A, B); foo(B, A);

} }

} }

When thread 1 locks mutex_B, it may be blocked because thread 2 might lock this the mutex_B before. In addition, when thread 2 locks mutex_A, it may be blocked because thread 1 might lock this the mutex_A before. If both of the situations happen, thread 1 will be waiting for thread 2 to release the mutex_B , while thread 2 will be waiting for thread 1 to release the mutex_A. You can see here is a deadlock!

(5) Deadlock Solution 1: Fine-Grained Locking

The simplest idea to deal with a deadlock is that can release some mutex so there will not be any deadlocks. For example, for the following code, we can discover that the lock(mutex_B) is not necessary for the function call foo2 so we can simply release this lock. This is called fine-grained locking.

/* T1 */ /* T2 */

// do something // do something

lock(mutex_A) { lock(mutex_B) {

// do something // do something

lock(mutex_B) { lock(mutex_A) {

// do something // do something

foo(A, B); foo2(A);

} }

} }

Then after the fine-grained locking,

/* T1 */ /* T2 */

// do something // do something

lock(mutex_A) { lock(mutex_B) {

// do something // do something

lock(mutex_B) { }

// do something lock(mutex_A) {

foo(A, B); // do something

} foo2(A);

} }

However, our case is different because we need the resource A and B both in thread 2. Thus, the fine-grained locking will not be useful.

(6) Deadlock Solution 2: Mega Locking

Another idea is that we can lock both A and B at the same time, and this is called a mega lock. To implement this, we can replace mutex_A and mutex_B with a mega mutex mutex_AB. After mega locking, the program will then be,

/* T1 */ /* T2 */

// do something // do something

lock(mutex_AB) { lock(mutex_AB) {

// do something // do something

foo(A, B); foo(B, A);

} }

Then in this application, the deadlock can be dealt with perfectly. However, for some other applications, we may create some mutex that can be too restrictive (i.e. mutex_ABCDEFG…) and this limits the parallelism and reduces the performance of the system.

(7) Deadlock Solution 3: Maintaining the Locking Order

There is another solution that is widely used. In this solution, we just need to maintain the order of the mutex for each thread. For example, if we restrict that all the threads must lock mutex_A before they call mutex_B, then the program will be,

/* T1 */ /* T2 */

// do something // do something

lock(mutex_A) { lock(mutex_A) {

// do something // do something

lock(mutex_B) { }

// do something lock(mutex_B) {

foo(A, B); // do something

} foo2(A);

} }

After this solution, there will be no potential deadlocks in this program.

(8) Deal with the Real Deadlocks

Note that we have talked that we can prevent deadlocks by locking order, mega locking, etc. But these methods can be quite expensive for the performance.

We can also do deadlock detection and recovery. But this requires us to design a rollback mechanism which can also be costly.

In reality, we will actually apply the Ostrich Algorithm! In computer science, the ostrich algorithm is a strategy of ignoring potential problems on the basis that they may be exceedingly rare. We just take an optimistic view that the deadlock can be rare and it not happens to me and then we hide our head in the sand just like an ostrich. So what if we have a real-time deadlock problem? Just reboot the machine! Remember that rebooting is our best friend of debugging.