Operating System 18 | Inter-Process Communication, Pipe, Message Queue, Socket, Shared-Memory IPC and Its Synchronization

1. Inter-Process Communication

(1) The Definition of Inter-Process Communication (IPC)

Inter-Process Communication (IPC) refers to a set of OS-supported mechanisms that processes use to interact with one another (e.g., to coordinate or communicate).

(2) Types of IPC

IPC mechanisms are broadly categorized as message-based or memory-based. Examples of each category are:

- Message-based (message passing): sockets, pipes, message queues

- Memory-based (shared memory): shared memory, memory-mapped files

2. Message-Passing IPC

(1) The Definition of Message Passing IPC

One model of IPC is message-passing (or message-based) IPC. As its name implies, processes create messages and then send or receive them.

(2) The Definition of Channel

The OS is responsible for creating and maintaining the channel that will be used to pass messages among processes. The data structure of the channel can be a buffer or a FIFO queue.

(3) The Definition of Ports

The OS also provides interfaces that processes use to pass messages via the channel. These interfaces are called ports. The sending process sends (writes) a message to its port, and the receiving process then receives (reads) the message from its own port. The channel is responsible for passing the message from one port to the other.

(4) OS Kernel Responsibilities for IPC

In message-passing IPC, the OS kernel is required to:

- establish the communication channel

- perform every single IPC operation: this means that both the send and receive operations require a system call and a data copy

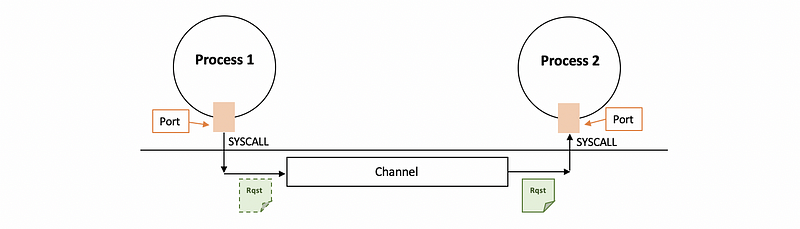

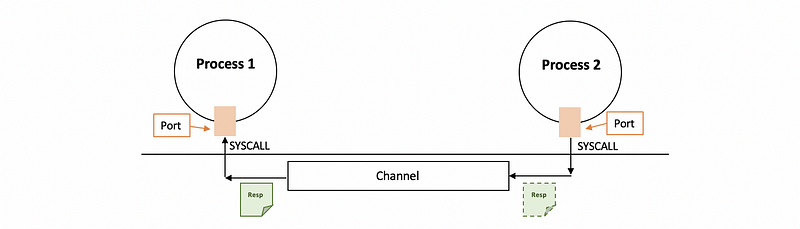

(5) Request-Response Interaction

Now, let’s look at the simplest request-response model between two processes: one process sends a request to the other, and the other responds after it receives the request.

As the diagrams show, even this simple request-response exchange requires 4 system calls, 4 user-kernel crossings, and 4 data copies: the request is copied from the sender's user space into the kernel and then from the kernel into the receiver's user space, and the response makes the same two trips in the opposite direction.

(6) Message-Passing IPC Evaluation

The downsides of message-passing IPC are:

- the overhead of user-kernel crossings

- the overhead of copying the data

But there is also an advantage:

- simplicity: the kernel handles all the IPC operations, including channel management and synchronization

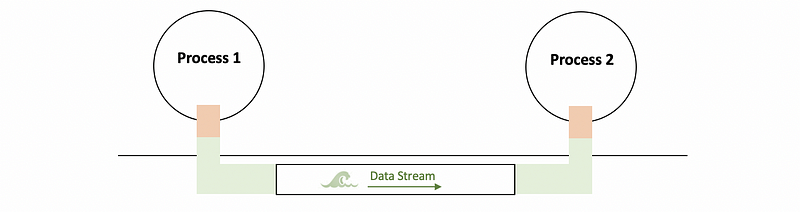

(7) Pipes

The simplest form of message-passing IPC is the pipe. A pipe has two endpoints, so only two processes can communicate through it at a time. There is no notion of a message in a pipe; there is just a stream of bytes pushed into the pipe by one process and read out by the other.

One popular use of pipes is to connect the output of one process to the input of another, so the entire byte stream produced by process 1 is delivered as input to process 2.

Pipes are also part of the POSIX standard.

(8) Message Queue

A more complex form of message-passing IPC is the message queue. As the name suggests, message queues understand the notion of the messages they transfer. Thus, a sending process must submit a properly formatted message to the channel, and the channel then delivers a properly formatted message to the receiving process. OS-level functionality for message queues also includes things like understanding message priorities and scheduling how messages are delivered.

Message queues are supported through different APIs, including the POSIX API and the Sys V API on UNIX-like systems.

(9) Socket

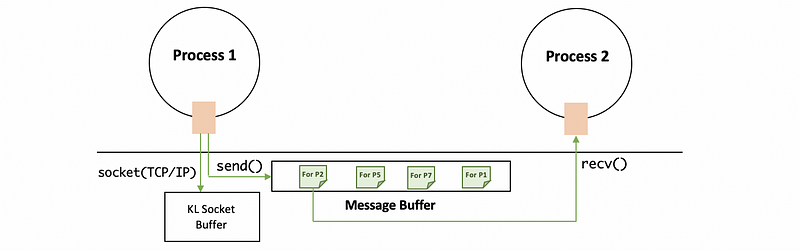

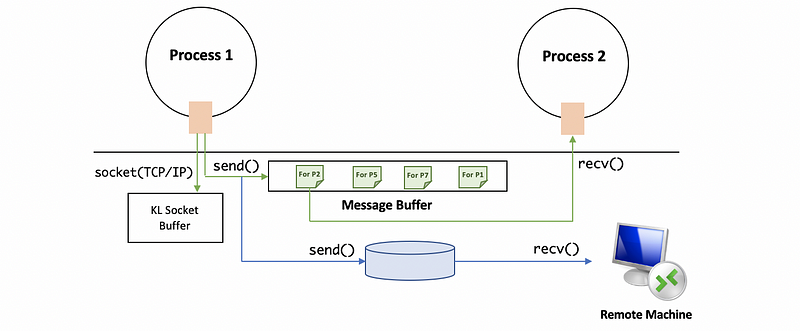

Another form of IPC, which we discussed in socket programming, is the socket. With sockets, the notion of ports that message-passing IPC requires is provided by the socket abstraction that the OS supports.

With sockets, processes send and receive messages through the socket API, which provides send() and recv() to move messages into and out of the kernel's message buffer.

The socket() call itself creates a kernel-level socket buffer and associates with it any kernel-level processing that must be performed as messages move. For instance, the socket may be a TCP/IP socket, in which case the kernel's TCP/IP protocol stack processes the data.

Sockets, as you probably know, don’t have to be used only by processes on a single machine. If the two processes are on different machines, the channel is essentially between a process and the network device that actually sends the data.

3. Shared-Memory IPC

(1) The Definition of Shared-Memory IPC

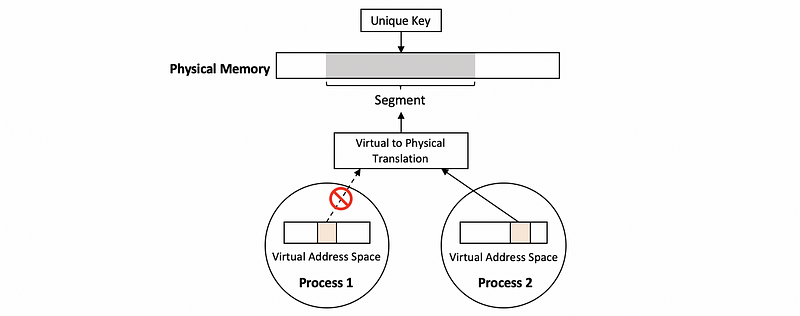

Shared-memory IPC means that the processes read and write a shared physical memory region. The OS is involved in establishing the shared memory channel between the processes, which it implements through virtual-to-physical address translation.

(2) Shared-Memory IPC Implementation

To have a shared physical memory region between two processes, the OS will map certain physical pages of memory into the virtual address spaces of both processes.

The physical memory that’s backing the shared memory buffer does not have to be a contiguous portion of physical memory. All of this leverages the memory management support that’s available in operating systems and on modern hardware.

(3) Shared-Memory IPC Evaluation

This approach has one big benefit: once the physical memory is mapped into both address spaces, the OS is no longer involved in the communication itself. This brings some advantages:

- system calls are only for setting up the shared space

- data copies will be significantly reduced

However, because the processes modify data in the same physical region, this approach also has downsides. For example, we need

- explicit synchronization

- a communication protocol agreed on by the processes, which can be complex

- to manage the shared buffer manually

(4) Shared-Memory IPC APIs

For Unix-like platforms, we have,

- POSIX APIs

- Sys V APIs

And for Android platforms, we have,

- Ashmem

(5) Message-Passing IPC vs. Shared-Memory IPC

In message passing,

- The CPU copies the data across the user-kernel boundary on every send and receive, so we pay CPU cycles for both the copies and the crossings

- Good for sending small amounts of data

In the shared memory case,

- CPU cycles are spent mapping the physical memory into the appropriate address spaces

- CPU cycles are also spent copying the data into the channel, but without user-kernel crossings

- Setting up the virtual-to-physical (V2P) translation has a high cost

- Pays off well when the mapping is set up once and used many times

- Can still perform well when used only a few times after setup, provided the amount of data is large

- Good for sending large amounts of data

(6) Local Procedure Calls

On Windows, if the data that needs to be transferred between address spaces is smaller than a certain threshold, the data is copied via ports. Otherwise, the data is copied at most once, to make sure it sits in a page-aligned area, and that area is then mapped into the address space of the target process. This mechanism, supported by the Windows kernel, is called Local Procedure Calls (LPC).

4. Sys V APIs

(1) The Definition of Segments

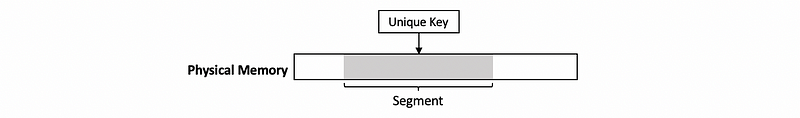

The OS supports shared memory segments that don’t need to consist of contiguous physical pages. These segments are system-wide, and there is a limit on their total number. In today's Linux systems this is not an issue, because we can use up to 4,000 segments; in the past, however, only 6 segments could be used.

(2) Create Segments

When a process requests that a shared memory segment be created, the OS allocates the required amount of physical memory, provided that the limits on segments are not exceeded, and assigns the segment a unique key. This key uniquely identifies the segment within the OS, and any other process can refer to this particular segment using the key.

shmget(key, size, flag) (shared memory get) is used to create or open a segment of the given size; it returns the segment's identifier (shmid), which the other calls use. key is the unique key, which must be explicitly chosen by the application. ftok(pathname, proj_id) can be used to generate this key: it derives a token from its arguments and always produces the same key for the same arguments, which is similar to hashing.

(3) Attaching Processes

After the segment is created, using the key of this segment, the OS establishes valid mappings between each process's virtual address space and the physical memory that backs the segment. Multiple processes can attach to the same shared memory segment so that these processes end up sharing access to the same pages. Reads and writes to these pages will be visible across all the processes.

shmaddr = shmat(shmid, addr, flags) (shared memory attach) attaches the shared memory segment to the virtual address space of the calling process. addr is a specific virtual address that the programmer can request for the mapping; if it is NULL, the OS chooses a suitable address. shmat() returns the virtual address shmaddr as an untyped pointer (void *), or (void *) -1 on error, so it can be used for any data type; the programmer decides how to interpret it.

(4) Detaching Processes

Detaching a process from a segment means invalidating the address mappings for the virtual region that corresponded to that segment within the process. In other words, the page table entries for those virtual addresses will no longer be valid. However, a segment isn’t really destroyed once it’s detached. In fact, a segment may be attached and detached and reattached multiple times by different processes during its lifetime. What this means is that once a segment is created, it’s like a persistent entity until there’s an explicit request for it to be destroyed.

shmdt(shmaddr) (shared memory detach) detaches the segment from the calling process. After this call, the virtual address shmaddr no longer maps to the shared memory.

(5) Destroy Segments

The segment is destroyed only when it’s explicitly deleted or when the system reboots. This makes it very different from regular (non-shared) memory allocated with malloc(), which disappears as soon as the process exits.

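shmctl(shmid, cmd, buf) (shared memory control) can be used to destroy a segment: the segment is removed when the cmd argument is set to IPC_RMID (IPC remove by ID). If processes are still attached, the segment is only marked for destruction and is actually destroyed after the last process detaches.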

5. POSIX API for Shared Memory

(1) Basis of the POSIX API

Although the POSIX API is the standard API, it is not as widely supported as the Sys V API. The main difference between the POSIX API and the Sys V API is that the POSIX API uses files instead of segments, so every shared memory region is identified by a file descriptor. This file is not a real file on disk; it exists in a temporary file system (tmpfs).

- Create Files

We use shm_open() to create (or open) the shared memory object; it returns a file descriptor.

- Attach Processes

We use mmap() to map the shared memory object, through its file descriptor, into the virtual address space of a process.

- Detach A Process

We use munmap() to unmap the shared memory region from the virtual address space of a process.

- Close the File Descriptor

When a process no longer needs the file descriptor, it calls close(). This releases the descriptor in that process only; regions that are already mapped stay valid, and the shared memory object itself is not destroyed.

- Destroy Files

Because close() only releases the file descriptor, the shared memory object and its contents remain. To remove it, we use shm_unlink(), which deletes the object's name; the memory itself is freed once no open descriptors or mappings refer to it anymore.

6. Synchronization for Shared Memory

(1) Why We Need Synchronizations?

Because all these processes can access the same physical memory, we have to synchronize their accesses (e.g., with mutexes and condition variables).

(2) Options for Synchronizations

On one hand, we can synchronize access to the shared memory with the mechanisms provided by the threading library (e.g., the Pthreads library). On the other hand, the OS also provides some mechanisms that can be used for synchronization.

Regardless of the method we choose, we must coordinate the number of concurrent accesses to the shared memory, and we must also know when the data is available and ready for consumption.

(3) Shared Memory Data Structure

Now, let’s look at an implementation of the shared memory data structure. Besides the data itself, the structure must also include a mutex that protects the shared memory. Thus, we can define this data structure as:

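typedef struct {
    pthread_mutex_t mutex;   /* synchronization variable, stored inside the shared region */
    char data[512];          /* payload stored in place (size is illustrative); a char * here would hold an address valid in only one process */
} shm_data_structure, *shm_data_structure_t;

(4) Initialize the Shared Memory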

Now that we have the data structure of the shared memory, we still need an actual instance of it in shared memory. As discussed, we can create one with the Sys V APIs:

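key = ftok(argv[0], 120);                                  /* derive a key from the program path */
seg = shmget(key, 1024, IPC_CREAT | IPC_EXCL | 0600);      /* create a new 1 KiB segment; fail if it exists */
shm_address = shmat(seg, NULL, 0);                         /* let the OS choose the virtual address */
shm_ptr = (shm_data_structure_t) shm_address;              /* interpret the memory as our structure */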

At the end of this code, shm_ptr points to the shared memory, interpreted as a shm_data_structure.

(5) PThread Synchronization for IPC

Now, let’s see how we can synchronize this shared memory with Pthreads. Pthreads provides the attribute types pthread_mutexattr_t (for mutexes) and pthread_condattr_t (for condition variables), which can be configured so that the synchronization variables work across processes. Before initializing the mutex, we first create and initialize an attribute object:

pthread_mutexattr_t m_attr;
pthread_mutexattr_init(&m_attr);

By default, a mutex is valid only within a single process, but for IPC synchronization other processes must be able to operate on it. pthread_mutexattr_setpshared(attr, pshared) permits a mutex to be operated upon by any thread that has access to the memory where the mutex is allocated, even if that memory is shared by multiple processes. To enable this, the pshared argument must be set to PTHREAD_PROCESS_SHARED:

pthread_mutexattr_setpshared(&m_attr, PTHREAD_PROCESS_SHARED);

Finally, we can initialize the mutex inside the shared memory:

pthread_mutex_init(&shm_ptr->mutex, &m_attr);

Now the mutex can be used by all the processes that attach the segment. Condition variables are set up the same way, by calling pthread_condattr_setpshared() on a pthread_condattr_t.

Note that we must keep the synchronization variables within the shared memory region (i.e., in the shared memory data structure) so that they can be shared among the processes.

7. IPC Linux Command Lines

(1) Message Queues

There are also other mechanisms for synchronization, for instance, message queues and semaphores. With a message queue, when a process writes data to the shared memory, it also sends a "ready" message to the message queue. When the other process receives the "ready" message, it reads the data and then sends an "OK" message back.

(2) Semaphores

A binary semaphore, whose value is either 0 or 1, can be used as a mutex. We have seen an implementation of this in the midterm review. When the value is 0, a process that tries to access the shared memory is blocked. When the value is 1, the process that wants to access the shared memory decrements it to 0, so that other processes will be blocked, and then proceeds with its execution. After it finishes using the memory, it increments the value back to 1 so that other processes can access the shared memory again.

(3) Linux Commands

Linux provides command-line tools for inspecting IPC facilities. The ipcs command lists all IPC facilities, including message queues, shared memory segments, and semaphores. To show only the shared memory segments, we can use:

$ ipcs -m

To delete an IPC facility, we can use the ipcrm command. For example, ipcrm -m [shmid] deletes the shared memory segment with the given shmid.