Time Series Analysis 11 | Review

1. True or False

- In time series analysis, we assume serial correlation (aka auto-correlation) might exist in the data.

- If a classical decomposition plot shows a seasonal component, then the data has significant seasonality.

- Autoregressive models are based on the idea that the current value of the series x_t can be explained as a function of p past series values.

- We can use the general k-fold cross-validation for time series.

- A model that had the smallest MAE in one-step forecasting using rolling forward cross-validation would perform the best for multi-step forecasting as well.

- Since ETS models without a damping parameter are equivalent to SARIMA models without an AR component, their long-term trend forecast is a straight line (flat, or continuing up or down).

- Prophet always gives more accurate forecasts than ETS or SARIMA.

- If {Xt} is stationary, then (1 − B)Xt (difference once) is still stationary.

- In ARIMA, if the series {Xt} becomes stationary at d = 2, then it is fine to difference it further, i.e. choose d > 2.

- SARIMA models essentially use a causal relationship to model Xt as a weighted sum of its past values. The weights are updated at each time point t using the autocovariance.

- ETS are SARIMA models with some specific differencing and MA orders.

- Based on the definition, RMSE penalizes large errors more than MAE.

- ACF plot for a given data provides unbiased estimates of the real autocorrelations.

- We have worked on the random walk S_t = X_1+…+X_t where the X_t’s are Bernoulli distributed. If instead the X_t are IID Normal(1, σ²), then this random walk is stationary.

- We can confirm that a time series is stationary if at least one root of the generating function lies outside the unit circle.

- No data can fit an ARMA(0,0) model.

- The model selection result from cross-validation might be impacted by the initial split of train and validation sets in the history data set.

- For the ARIMA(p,d,q) process, the number of parameters to be estimated in the model is p+q+d.

- Consider the time series Yt = 2t + St + Zt, where St is the weekly seasonal component with m = 7, and Zt is white noise. We don’t need both trend and seasonal differencing (1 − B)(1 − B^7)Yt to reach stationarity; the seasonal differencing (1 − B^7)Yt alone is enough.

Solution:

T

F // a classical decomposition always produces a seasonal component, whether or not the seasonality is significant

T

F

F

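T // e.g. simple exponential smoothing corresponds to ARIMA(0,1,1) (flat forecast) and Holt's linear trend method to ARIMA(0,2,2) (straight-line forecast)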

F

T

F // differencing further than needed over-differences the series and can cause overfitting

T

T

T

F // the sample ACF is a biased estimator

F // the mean E[S_t] = t depends on t, so it is not constant

F // all roots must lie outside the unit circle

F // white noise is an ARMA(0,0) process

T

F // it should be p + q + 1 when the model includes a mean

T // because 1 − B^7 contains 1 − B as a factor: 1 − B^7 = (1 − B)(1 + B + … + B^6)
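The last answer can be checked numerically. The sketch below builds a noise-free version of Yt = 2t + St with an arbitrary weekly pattern (the pattern values are made up for illustration) and applies only the seasonal difference (1 − B^7). Every differenced value equals the constant 2·7 = 14, so both the linear trend and the seasonal component are gone.

```python
import numpy as np

m = 7                       # weekly seasonal period
t = np.arange(1, 71)        # 10 weeks of daily time indices
# Hypothetical weekly pattern, repeated so that S_t = S_{t-7}
pattern = np.array([3.0, -1.0, 0.5, 2.0, -2.5, 1.0, -3.0])
s = pattern[(t - 1) % m]
y = 2 * t + s               # noise-free part of Y_t = 2t + S_t + Z_t

# Seasonal difference (1 - B^7) Y_t = Y_t - Y_{t-7}
seasonal_diff = y[m:] - y[:-m]
print(np.unique(seasonal_diff))
```

With the noise term included, the differenced series is 14 + Zt − Z_{t−7}: a constant plus a finite combination of white noise terms, which is stationary.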

2. Multiple Choice

(1) Which of the following can’t be a component for a time series plot?

- Seasonality

- Trend

- Noise

- None of the above

Solution: D

(2) Which of the following is not a necessary condition for weakly stationary time series?

- Mean is constant and does not depend on time.

- Covariance between Xt and Xs depends on s and t only through their difference |s − t| (where s and t are time points).

- Xt need to be normally distributed.

Solution: C

(3) A stationary autoregressive process of order one is most like which of the following,

- a white noise

- a moving average process of order one

- a moving average process of order infinity

- a random walk

Solution: C
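The answer follows from back-substitution. Assuming the AR(1) is written as X_t = ϕX_{t−1} + Z_t with |ϕ| < 1 and {Z_t} white noise (this notation is an assumption, not taken from the question), substituting repeatedly gives:

```latex
\begin{aligned}
X_t &= \phi X_{t-1} + Z_t \\
    &= \phi^2 X_{t-2} + \phi Z_{t-1} + Z_t \\
    &= \cdots = \sum_{j=0}^{\infty} \phi^{j} Z_{t-j}, \qquad |\phi| < 1 .
\end{aligned}
```

The remainder term ϕ^k X_{t−k} vanishes as k grows because |ϕ| < 1, which is why stationarity is needed for the MA(∞) form.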

(4) Which one expresses the prediction error relative to the original scale of the data?

- AIC

- BIC

- RMSE

- MAE

- MAPE

Solution: MAPE

(5) Which one is the most sensitive to outliers?

- AIC

- BIC

- RMSE

- MAE

- MAPE

Solution: RMSE
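A small numerical sketch of why RMSE reacts more strongly than MAE: the two error vectors below are hypothetical and differ in a single outlier. Because RMSE squares each error before averaging, the outlier dominates it, while MAE grows only linearly.

```python
import numpy as np

def mae(errors):
    """Mean absolute error of a vector of forecast errors."""
    return float(np.mean(np.abs(errors)))

def rmse(errors):
    """Root mean squared error of a vector of forecast errors."""
    return float(np.sqrt(np.mean(np.square(errors))))

# Hypothetical forecast errors: identical except for one outlier
clean = np.array([1.0, -1.0, 1.0, -1.0])
with_outlier = np.array([1.0, -1.0, 1.0, -9.0])

print(mae(clean), rmse(clean))
print(mae(with_outlier), rmse(with_outlier))
```

On the clean errors both metrics equal 1; with the outlier, MAE rises to 3 while RMSE rises to √21 ≈ 4.58.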

(6) Which one prevents overfitting the best?

- AIC

- BIC

- RMSE

- MAE

- MAPE

Solution: BIC

(7) Suppose you are interested in forecasting annual corn production in an agricultural setting. Suppose also that, in addition to data on annual corn production, you have data on annual rainfall. Are these variables most likely related in an EXOGENOUS or ENDOGENOUS manner? Is the most appropriate multivariate modeling strategy VAR or SARIMAX?

- EXOGENOUS, VAR

- EXOGENOUS, SARIMAX

- ENDOGENOUS, VAR

- ENDOGENOUS, SARIMAX

Solution: B

(8) Suppose you are interested in forecasting the daily number of crimes committed in San Francisco. Suppose also that, in addition to daily crime data, you have daily data on the number of SFPD officers on patrol. Are these variables most likely related in an EXOGENOUS or ENDOGENOUS manner? Is the most appropriate multivariate modeling strategy VAR or SARIMAX?

- EXOGENOUS, VAR

- EXOGENOUS, SARIMAX

- ENDOGENOUS, VAR

- ENDOGENOUS, SARIMAX

Solution: C

(9) Suppose that the time series {Yt} exhibits monthly seasonality. Which of the following modeling approaches would be most suitable?

- A ARIMA

- B Double exponential smoothing

- C Triple exponential smoothing

- D Vector autoregression

Solution: C

3. Calculations

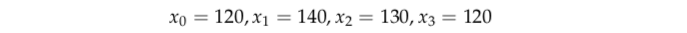

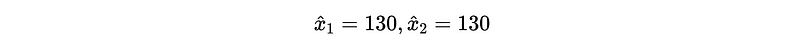

(1) For the data below, apply a centered MA filter with window = 3 and give the smoothed estimate of the trend.

Ans:
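The data for this question are in a figure that is not reproduced here, so the sketch below uses made-up values purely to show the mechanics. With window = 3, the centered estimate at time t is (x_{t−1} + x_t + x_{t+1})/3, which is defined for every point except the first and the last.

```python
import numpy as np

def centered_ma3(x):
    """Centered moving average with window 3; endpoints are undefined (NaN)."""
    x = np.asarray(x, dtype=float)
    trend = np.full(x.shape, np.nan)
    trend[1:-1] = (x[:-2] + x[1:-1] + x[2:]) / 3.0
    return trend

# Hypothetical data, not the values from the original question
x = [3, 6, 9, 6, 3, 12]
print(centered_ma3(x))
```

For these illustrative values the interior estimates are 6, 7, 6, 7, with NaN at both ends.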

(2) A random walk example from homework was defined as: {S_t, t = 0, 1, …, n} is obtained by cumulatively summing iid random variables. Define S_0 = 0 and

and {X_t} is an IID binary process with,

Is this random walk process stationary?

Solution: No. Because,

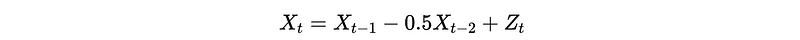
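The same argument works for any IID steps. Writing μ = E[X_t] and σ² = Var(X_t) (generic symbols introduced here; the specific binary distribution is the one given in the question), the moments of S_t = X_1 + … + X_t are:

```latex
\begin{aligned}
\mathbb{E}[S_t] &= \sum_{i=1}^{t} \mathbb{E}[X_i] = t\mu, \\
\operatorname{Var}(S_t) &= \sum_{i=1}^{t} \operatorname{Var}(X_i) = t\sigma^2 .
\end{aligned}
```

Since the variance grows with t whenever σ² > 0, the process cannot be weakly stationary, even when μ = 0.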

(3) Is the following AR(2) process stationary?

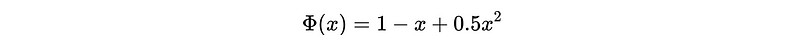

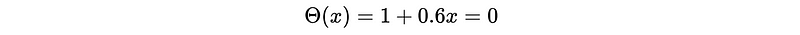

Solution: generating function

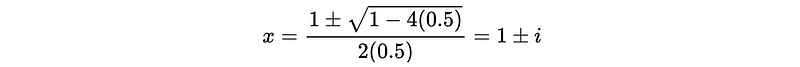

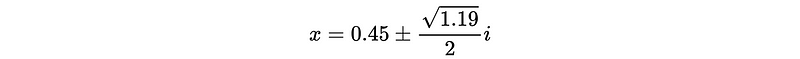

with roots,

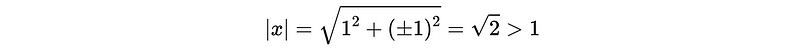

and,

so stationary.

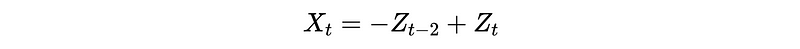

(4) Is the following MA(2) invertible?

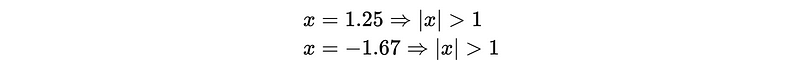

Solution: generating function

with roots,

and,

So not invertible.

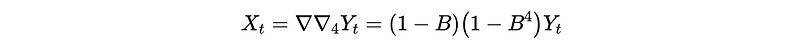

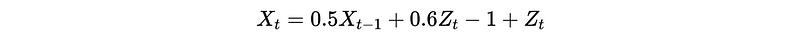

(5) Consider the time series given by,

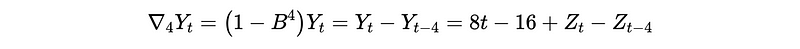

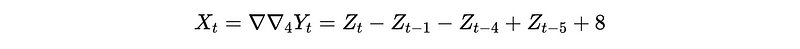

where S_t is the seasonal component with lag = 4 (Hint: assume S_t = S_{t-m}). Prove that,

is a linear combination of white noises, therefore stationary.

Solution:

Then,

Then this is a combination of white noises therefore stationary.

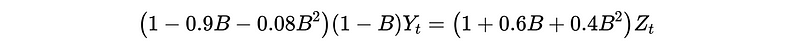

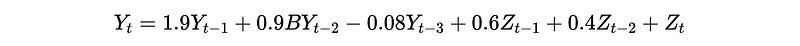

(6) For an ARMA(2,2) process,

where,

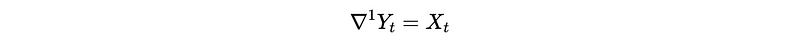

If X_t is related to Y_t as,

show explicitly how Yt depends on previous Ys and previous Zs, i.e. express Yt as an equation in Y_{t-1}, Y_{t-2},…, Z_t, Z_{t-1},… and identify the coefficients.

Solution:

By,

We then have,

Then,

(7) Consider a VAR(2) model for Y_{1,t} and Y_{2,t}. This model can be expressed as

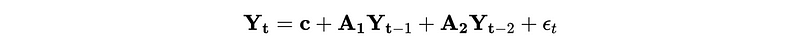

where,

and

By substituting the vectors and matrices into the equation above, explicitly write down the system of equations for Y_{1,t} and Y_{2,t} that this VAR(2) model is composed of.

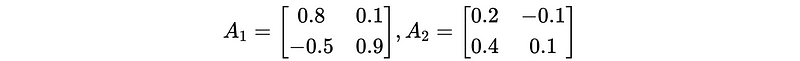

Solution:

So,

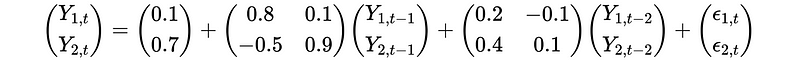
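For reference, a generic two-variable VAR(2) expands as follows. The notation here is an assumption, since the original matrices are in the figures: Y_t = c + A_1 Y_{t−1} + A_2 Y_{t−2} + ε_t, where a^{(k)}_{ij} denotes the (i, j) entry of A_k.

```latex
\begin{aligned}
Y_{1,t} &= c_1 + a^{(1)}_{11} Y_{1,t-1} + a^{(1)}_{12} Y_{2,t-1}
               + a^{(2)}_{11} Y_{1,t-2} + a^{(2)}_{12} Y_{2,t-2} + \varepsilon_{1,t}, \\
Y_{2,t} &= c_2 + a^{(1)}_{21} Y_{1,t-1} + a^{(1)}_{22} Y_{2,t-1}
               + a^{(2)}_{21} Y_{1,t-2} + a^{(2)}_{22} Y_{2,t-2} + \varepsilon_{2,t}.
\end{aligned}
```

Each equation regresses one variable on two lags of both variables, which is what makes the relationship endogenous.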

(8) A general SARIMA(p, d, q) ∗ (P, D, Q)_m model can be written as,

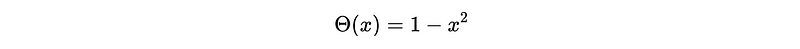

Consider a SARIMA(0,1,1)∗(1,1,0)_4 model, define d, D, m, and the generating functions ϕ(B), Φ(B), θ(B), and Θ(B) in this case.

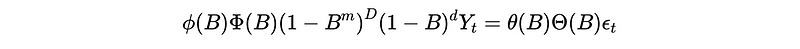

Solution:

d = 1

D = 1

m = 4

ϕ(B) = 1

Φ(B) = 1 + Φ_1 B

θ(B) = 1 + θ_1 B

Θ(B) = 1

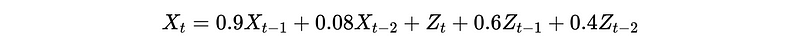

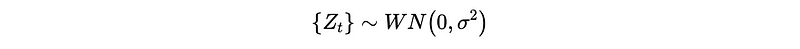

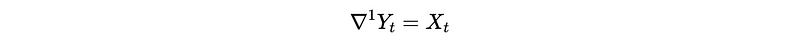

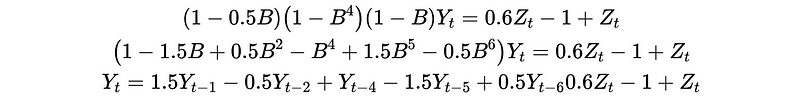

(9) {Yt} is a time series. After the trend and seasonal differencing:

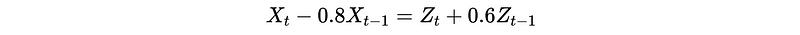

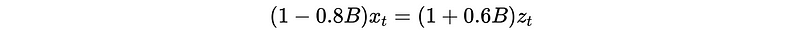

where, Xt is a stationary series ARMA(1, 1):

Based on this information, show explicitly how Yt is related to its own previous values and noises, i.e. express Yt as an equation in Y_{t−1}, Y_{t−2},…, Zt, Z_{t−1}, Z_{t−2}, … and identify the coefficients.

Solution:

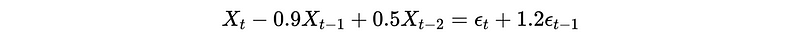

(10) For the ARMA(2,1) process,

where,

check if it is causal and invertible.

Solution:

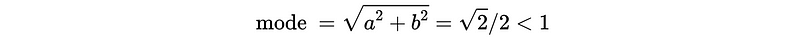

- Causal:

Roots are,

The modulus is,

So not causal.

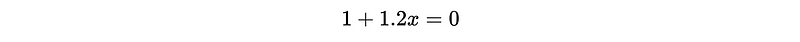

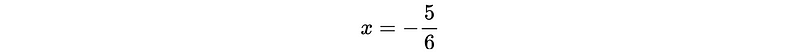

- Invertible:

The root is,

This is inside the unit circle so not invertible.

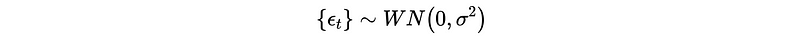

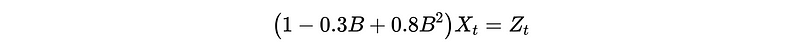
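Both checks reduce to finding polynomial roots and comparing their moduli with 1. A minimal sketch follows, assuming the convention ϕ(B) = 1 − ϕ_1 B − … − ϕ_p B^p and θ(B) = 1 + θ_1 B + … + θ_q B^q. The helper names and the coefficients in the example are hypothetical, not those of the question.

```python
import numpy as np

def roots_outside_unit_circle(poly_coeffs):
    """poly_coeffs = [c0, c1, ..., ck] for c0 + c1*z + ... + ck*z^k."""
    # np.roots expects the highest-degree coefficient first
    roots = np.roots(poly_coeffs[::-1])
    return bool(np.all(np.abs(roots) > 1.0)), roots

def is_causal(phi):
    # AR polynomial 1 - phi_1 z - ... - phi_p z^p
    return roots_outside_unit_circle([1.0] + [-c for c in phi])[0]

def is_invertible(theta):
    # MA polynomial 1 + theta_1 z + ... + theta_q z^q
    return roots_outside_unit_circle([1.0] + list(theta))[0]

# Hypothetical ARMA(2,1): X_t = 0.5 X_{t-1} + 0.2 X_{t-2} + Z_t + 2 Z_{t-1}
print(is_causal([0.5, 0.2]))   # both AR roots lie outside the unit circle
print(is_invertible([2.0]))    # MA root is z = -0.5, inside the unit circle
```

For this illustrative model the AR part is causal while the MA part is not invertible.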

(11) For the ARMA(1,1) process,

where,

check if it is causal and invertible.

Solution:

Then,

Solve,

And,

For,

Then, it is causal and invertible.

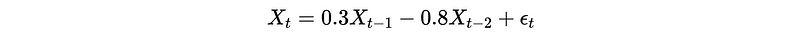

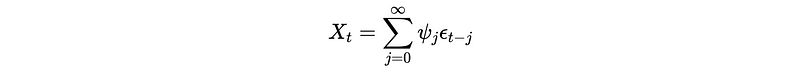

(12) For a stationary AR(2) process,

it can be expressed as an MA(∞) process:

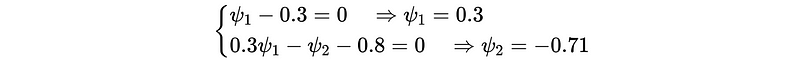

Compute the coefficients ψ1 and ψ2 in the equivalent MA(∞) expression.

Solution:

Then by MA(∞),

So,

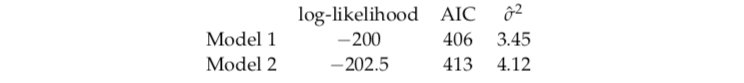
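The ψ-weights can also be generated recursively. Assuming the AR(2) is written X_t = ϕ_1 X_{t−1} + ϕ_2 X_{t−2} + Z_t, matching coefficients in ϕ(B)ψ(B) = 1 gives ψ_0 = 1, ψ_1 = ϕ_1, ψ_2 = ϕ_1² + ϕ_2, and in general ψ_j = ϕ_1 ψ_{j−1} + ϕ_2 ψ_{j−2}. The sketch below uses hypothetical coefficients, not those of the question.

```python
def psi_weights(phi, n):
    """First n+1 MA(infinity) weights psi_0..psi_n of a causal AR(p)."""
    psi = [1.0]
    for j in range(1, n + 1):
        # psi_j = sum_{k=1}^{min(j, p)} phi_k * psi_{j-k}
        psi.append(sum(phi[k - 1] * psi[j - k]
                       for k in range(1, min(j, len(phi)) + 1)))
    return psi

# Hypothetical AR(2): phi_1 = 0.5, phi_2 = 0.2
print(psi_weights([0.5, 0.2], 2))
```

For these illustrative coefficients, ψ_1 = 0.5 and ψ_2 = 0.5² + 0.2 = 0.45, matching the closed-form expressions.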

(13) Just based on the given summary of the following two models, choose one that you think might be a better choice and give the order p given q = 1 of the chosen model. Briefly justify your answer.

- (Hint: AIC = −2 ∗ loglikelihood + 2(p + q + 1))

Solution:

- Select the model with higher log-likelihood and lower AIC

- AIC = 406 = 400 + 2(p+1+1) ⇒ p = 1