Video Game Design 1 | Unity 3D Installation, Game Engine, Basic Concepts for Game Designing, Game and Real World Synchronization

1. Unity 3D Installation

(0) Hardware Requirements

- 64-bit multi-core processor

- 16GB memory (8GB would be okay)

- 256GB SSD (128GB would be okay)

- OS: Windows 10 or macOS 10.12.5 or higher

- No Linux

- No non-x86 platforms such as ARM-based Macs (M1)

(1) Download Unity Hub

Before installing the Unity 3D engine, we should first install Unity Hub from this link.

(2) Unity Installation

Once you have installed Unity Hub, go to the “Installs” tab and “Add” the version listed below. You may need to visit the “download archive” shown in the Unity Hub “Add a Unity Version” dialog box to find the version you need. You can also refer to this link. The version we are going to use is:

2020.3.16f1 (LTS)

Make sure you install this recommended version or a later one. We should add at least the following modules:

- [Required for building] Mac Build Support (Select all)

- [Required for building] Windows Build Support (Select all)

- Documentation

- Visual Studio (can be skipped if you already have it)

(3) Installing IDE

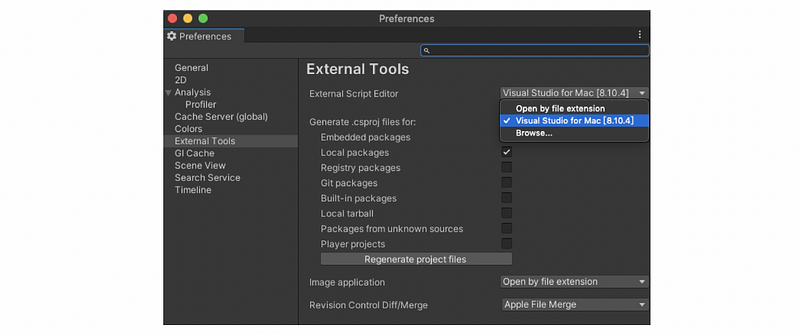

We should use Visual Studio for editing code; the free Visual Studio Community edition is strongly recommended for both Windows and Mac. You can download it from this website. After installing Visual Studio, we have to:

- Enable Visual Studio integration: Preferences > External Tools > Visual Studio

- Install the Tools for Unity Visual Studio extension: IntelliSense code completion, debugger, etc.

2. Game Engine

(1) Early Game Engine

The very first computer games were made when computers were not yet very capable, so making a game usually took a lot of effort. In fact, some of the earliest games were built from discrete or Boolean logic, so there was no distinction between game logic and game data. As a result, very little could be reused.

(2) The Definition of Game Engine

The game engine is a closed-loop sensory simulation meant to convince a game player that a virtual world exists and can be interacted with, often in real-time. A simulation is based on a rapid sequence of frozen frames.

(3) The Definition of Kernel

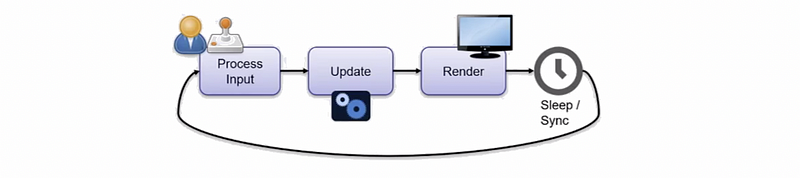

The logic component of a game is referred to as the kernel: the moment-to-moment logic that creates an interactive experience. The kernel is therefore a frame-based loop with the player as part of it. This loop, called the main loop, works as follows:

- Process: processing the inputs from players

- Update: map update, enemy movements, etc.

- Render: visual, audio, etc.

- Sleep: synchronize the game world to the real world time
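The four phases above can be sketched as a small loop. This is a minimal sketch in Python rather than Unity code; `run_main_loop` and its parameters (`process`, `update`, `render`, `max_frames`, and the injectable `clock` and `sleep`) are hypothetical names chosen for illustration.

```python
import time


def run_main_loop(process, update, render, target_fps=60, max_frames=3,
                  clock=time.perf_counter, sleep=time.sleep):
    """Run max_frames iterations of a Process/Update/Render/Sleep loop.

    process, update, and render are caller-supplied callbacks (hypothetical
    names). clock and sleep can be replaced, which makes the loop easy to test.
    """
    frame_budget = 1.0 / target_fps   # real-world seconds available per frame
    frames_run = 0
    while frames_run < max_frames:
        frame_start = clock()

        inputs = process()            # Process: read the player's inputs
        update(inputs, frame_budget)  # Update: map, enemy movements, etc.
        render()                      # Render: visuals, audio, etc.

        # Sleep: wait out the rest of the frame so game time tracks real time.
        remaining = frame_budget - (clock() - frame_start)
        if remaining > 0:
            sleep(remaining)
        frames_run += 1
    return frames_run
```

A real engine runs this loop until the player quits; `max_frames` exists here only so the sketch terminates.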

(4) Features of Game Engine

Let us now look at the features of a game engine. Whether the engine is Unity or Unreal, many of these features overlap.

- creation is often declarative: programming is not necessarily needed

- extension of tools: efficiency

- underlying computational kernel

- platform abstraction

- deploy with an integrated development environment (IDE)

- support live editing (WYSIWYG)

- workflow support

- asset management

- content pipeline

- standalone

- genre flexibility (2D, 3D, RPG, etc.)

(5) Components of a Modern Game Engine

- computational kernel (main loop)

- input management: low latency, abstractions, input mappings

- rendering engine: canonical render pipeline, scene graph, space partitioning, linear math routines

- physics engine: constraint solver, simultaneous world synchronization

- audio engine

- networking module: platform abstraction, event sync, priority management, prediction

- scheduling updates

- artificial intelligence module: path planning, behavior implementation, behavior planning, time independencies

- etc.

(6) Modern Game Engine

A modern game engine is a software framework that supports the creation, development, and deployment of a game. Earlier interactive authoring tools such as Sketchpad and the HyperCard editor predate modern engines and anticipated the kind of direct, visual editing they offer.

3. Basic Concepts for Game Designing

(1) Human Perception Principles

Humans need some time to process visual or auditory information. Experimental results show:

- Visual stimulus reaction: about 0.2 s

- Auditory stimulus reaction: about 0.16 s

One important goal for game design is to fool the human senses, and we have quite a lot of methods to achieve this.

(2) Creating Animation

Consecutive similar images appear as persistent shapes that move or change if the images change fast enough. Typically, 10 FPS (frames per second) is enough for a sense of spatial presence, but for better quality we usually aim higher. For example, film typically runs at 24 FPS, and games commonly aim for more than 30 FPS. In practice, the performance we want to achieve ultimately decides the frame rate we target.

(3) Frozen Frame Time

A frame is frozen in time. Almost everything in a frame update shares a common time reference, which is basically the start of frame processing.

Frames have a frozen time for two reasons. First, the output is consistent because all objects animate by the same amount of time. Second, we avoid potential race conditions in the logic that dictates object interactions within that frame.
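As a minimal sketch of this idea, the time step can be sampled once at the start of the frame and handed to every object. The `Mover` class and `update_frame` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Mover:
    """A hypothetical game object moving along one axis."""
    position: float
    velocity: float  # units per second

    def animate(self, dt: float) -> None:
        self.position += self.velocity * dt


def update_frame(objects, previous_frame_time, frame_start_time):
    """Advance every object by the same frozen time step."""
    # The time reference is computed once, at the start of frame processing.
    dt = frame_start_time - previous_frame_time
    for obj in objects:
        obj.animate(dt)  # no object reads the clock on its own
    return dt
```

Because no object reads the clock itself, two objects updated in the same frame can never disagree about what time it is.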

(4) Simulation Rules

Because we don’t see everything in a frame, we don’t need to conduct a complete simulation. In fact, most of the time we simulate only what is needed. Here are some rules that reduce the simulation work:

- Render only the surface: no one cares about what’s inside

- Apply frustum and occlusion culling to objects

- Use dynamic levels of detail (LOD): more detail when closer

- etc.

In short, the guiding rule for simulation is this: if something happens in the game but no player is around to perceive it, we treat it as if it does not exist.
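Two of the rules above, culling and levels of detail, can be illustrated with a short sketch. It makes simplifying assumptions: the view volume is a 2D cone rather than a full 3D frustum with near and far planes, and the function names and distance thresholds are hypothetical.

```python
import math


def is_in_view_cone(camera_pos, camera_dir, half_fov_deg, obj_pos):
    """Return True if obj_pos lies inside the camera's 2D viewing cone.

    This is a simplified stand-in for frustum culling.
    """
    dx = obj_pos[0] - camera_pos[0]
    dy = obj_pos[1] - camera_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return True  # the object sits exactly at the camera position
    dir_len = math.hypot(camera_dir[0], camera_dir[1])
    # Cosine of the angle between the view direction and the object direction.
    cos_angle = (dx * camera_dir[0] + dy * camera_dir[1]) / (dist * dir_len)
    return cos_angle >= math.cos(math.radians(half_fov_deg))


def select_lod(distance, thresholds=(10.0, 50.0)):
    """Pick a level of detail: 0 is the most detailed, larger is coarser."""
    for level, limit in enumerate(thresholds):
        if distance <= limit:
            return level
    return len(thresholds)
```

Objects that fail the cone test can be skipped entirely, and the remaining ones are drawn with the detail level that matches their distance.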

(5) Side Effects of Detail Management

We have seen that it is easier to simulate only the things around the player or the things we can see. However, we can expect some side effects:

- pop-in / pop-up objects and characters

- un-activated characters

- teleporting characters

- glitching out of levels

- disappearing objects

- seeing inside solids

- etc.

(6) Canonical Render Pipeline

When we think about how our game renders on the screen, we are thinking about the following concepts:

- GPU: processes the blueprints and the geometry of the game objects

- Output Buffer: the place to store the processed results that will finally end up on the screen (e.g., the Z-buffer: a type of data buffer used in computer graphics to represent depth information of objects in 3D space from a particular perspective)

Note that all of this work is highly parallelized across the pipeline.
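To make the Z-buffer idea concrete, here is a minimal CPU-side sketch of a depth test. It assumes that a smaller depth value means closer to the camera; the function name and the fragment format `(x, y, depth, color)` are hypothetical, and a real GPU performs this test in parallel in hardware.

```python
def draw_fragments(width, height, fragments, far=float("inf")):
    """Resolve visibility per pixel with a depth (Z) buffer.

    fragments is an iterable of (x, y, depth, color) tuples.
    Returns the color buffer and the depth buffer as nested lists.
    """
    depth = [[far] * width for _ in range(height)]
    color = [[None] * width for _ in range(height)]
    for x, y, z, c in fragments:
        inside = 0 <= x < width and 0 <= y < height
        # Keep the fragment only if it is closer than what is already stored.
        if inside and z < depth[y][x]:
            depth[y][x] = z
            color[y][x] = c
    return color, depth
```

The order in which fragments arrive does not matter: the closest one always wins, which is what lets the pipeline process geometry in parallel.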

(7) Frameless Rendering Vs. Traditional Rendering

There is another technique called frameless rendering, which, as the name suggests, does not render in whole frames. Rendering this way shows a characteristic spatial pattern, especially when there is motion, because only a limited number of pixels can be updated at a time. Frameless rendering is often used with ray tracing (tracing the paths of light rays), because ray tracing is costly.

(8) Particle-Based Rendering Vs. Surface Rendering

Instead of rendering only the surface, we can also use particle-based rendering. Objects rendered as particles can be used to simulate water, deformable terrain, or anything that can be torn open. There is an experimental game called Jelly In The Sky, a tank battle game in which everything behaves like jelly.

(9) Common Game Categories

- Real-time strategy (RTS)

- Shooters (FPS and TPS)

- Multiplayer online battle arena (MOBA)

- Role-playing (RPG, ARPG, and More)

- etc.

4. Game and Real World Synchronization

(1) Frame Rates

Humans can notice frame rates up to around 60 FPS for interactive synthesized animation; rates above 60 FPS are generally not perceived. One exception that may be noticed is the response time of the input device, which adds to the latency. We can see an example of the impact of frame rate from this link.

(2) Sleep Time for Synchronization

After receiving input from the user, we have to update the world based on it: the previous world state is advanced to a new state, which we then render. Performing the update has a computation cost, measured in real-world time, so the sleep time must be adjusted to keep the frame schedule stable and consistent.

Typically, a game running at 60 Hz (60 FPS) has 0.01667 seconds per frame, that is, 16.67 milliseconds or 16,666.67 microseconds. Within this small amount of time we usually have to do a lot of work, and the goal is to finish all of it before the next frame. If the work finishes early, we wait (sleep or spin) for the remainder of the frame so that the rate stays at a constant 60 FPS.
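The arithmetic above can be written down directly. The sketch below also shows one possible way to keep the schedule stable, which is to wait until an absolute per-frame deadline rather than for a fixed duration; this deadline approach and all function names are assumptions made for illustration, not something prescribed here.

```python
def frame_budget(fps):
    """Time available per frame in seconds, milliseconds, and microseconds."""
    seconds = 1.0 / fps
    return {"s": seconds, "ms": seconds * 1e3, "us": seconds * 1e6}


def frame_deadline(start_time, fps, frame_index):
    """Absolute time by which frame number frame_index (0-based) should end."""
    return start_time + (frame_index + 1) / fps


def wait_time(now, deadline):
    """Time to sleep or spin before the deadline; 0.0 if the frame ran over."""
    return max(0.0, deadline - now)
```

For example, at 60 FPS a frame whose work finishes 10 ms after the frame started leaves roughly 6.67 ms to wait, while a frame that takes 20 ms has already missed its deadline and should not wait at all.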

(3) Latency Issues

We have to control the latency between frames, because the player will notice the difference when the latency is greater than 20 ms. Both pipelining and caching are good for throughput but bad for latency, so latency can become a serious issue.

For some game types, such as indirect real-time strategy games, longer latency is not a problem. For others, such as VR games (for example, first-person shooters), the latency becomes noticeable if the response takes longer than the visual stimulus reaction time (0.2 s), and users may even get motion sick because of it.

(4) Ways to Reduce Latency

Here are some ways to reduce the latency. We will not introduce them in detail here.

- higher frame rate

- adaptive Vsync

- adjust pipelining

- adjust cache coalescing

- input prediction (Kalman filter)

- relaxed frustum culling

- late input update

- direct memory access (DMA)

- wider memory bus
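As a small illustration of the input prediction item, here is a minimal scalar Kalman filter. It is a sketch under strong assumptions: the input is a single value modeled as a random walk, so the predicted value is simply the latest estimate, whereas a practical input predictor would usually also track velocity. The class name and parameters are hypothetical.

```python
class ScalarKalmanFilter:
    """One-dimensional Kalman filter with a random-walk model."""

    def __init__(self, estimate, variance, process_var, measurement_var):
        self.estimate = estimate                 # current best guess
        self.variance = variance                 # uncertainty of that guess
        self.process_var = process_var           # how much the value drifts per step
        self.measurement_var = measurement_var   # noise of the input device

    def predict(self):
        """Predict the next value; under a random walk only uncertainty grows."""
        self.variance += self.process_var
        return self.estimate

    def correct(self, measurement):
        """Blend a new measurement into the estimate using the Kalman gain."""
        gain = self.variance / (self.variance + self.measurement_var)
        self.estimate += gain * (measurement - self.estimate)
        self.variance *= (1.0 - gain)
        return self.estimate
```

Each frame would call `predict()` to get a value to use before the device reports, then `correct()` once the actual measurement arrives.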